Время на прочтение

11 мин

Количество просмотров 118K

Еще одна статья о CUDA — зачем?

На Хабре было уже немало хороших статей по CUDA — раз, два и другие. Однако, поиск комбинации «CUDA scan» выдал всего 2 статьи никак не связанные с, собственно, алгоритмом scan на GPU — а это один из самых базовых алгоритмов. Поэтому, вдохновившись только что просмотренным курсом на Udacity — Intro to Parallel Programming, я и решился написать более полную серию статей о CUDA. Сразу скажу, что серия будет основываться именно на этом курсе, и если у вас есть время — намного полезнее будет пройти его.

Содержание

На данный момент планируются следующие статьи:

Часть 1: Введение.

Часть 2: Аппаратное обеспечение GPU и шаблоны параллельной коммуникации.

Часть 3: Фундаментальные алгоритмы GPU: свертка (reduce), сканирование (scan) и гистограмма (histogram).

Часть 4: Фундаментальные алгоритмы GPU: уплотнение (compact), сегментированное сканирование (segmented scan), сортировка. Практическое применение некоторых алгоритмов.

Часть 5: Оптимизация GPU программ.

Часть 6: Примеры параллелизации последовательных алгоритмов.

Часть 7: Дополнительные темы параллельного программирования, динамический параллелизм.

Задержка vs пропускная способность

Первый вопрос, который должен задать каждый перед применением GPU для решения своих задач — а для каких целей хорош GPU, когда стоит его применять? Для ответа нужно определить 2 понятия:

Задержка (latency) — время, затрачиваемое на выполнение одной инструкции/операции.

Пропускная способность — количество инструкций/операций, выполняемых за единицу времени.

Простой пример: имеем легковой автомобиль со скоростью 90 км/ч и вместимостью 4 человека, и автобус со скоростью 60 км/ч и вместимостью 20 человек. Если за операцию принять перемещение 1 человека на 1 километр, то задержка легкового автомобиля — 3600/90=40с — за столько секунд 1 человек преодолеет расстояние в 1 километр, пропускная способность автомобиля — 4/40=0.1 операций/секунду; задержка автобуса — 3600/60=60с, пропускная способность автобуса — 20/60=0.3(3) операций/секунду.

Так вот, CPU — это автомобиль, GPU — автобус: он имеет большую задержку но также и большую пропускную способность. Если для вашей задачи задержка каждой конкретной операции не настолько важна как количество этих операций в секунду — стоит рассмотреть применение GPU.

Базовые понятия и термины CUDA

Итак, разберемся с терминологией CUDA:

- Устройство (device) — GPU. Выполняет роль «подчиненного» — делает только то, что ему говорит CPU.

- Хост (host) — CPU. Выполняет управляющую роль — запускает задачи на устройстве, выделяет память на устройстве, перемещает память на/с устройства. И да, использование CUDA предполагает, что как устройство так и хост имеют свою отдельную память.

- Ядро (kernel) — задача, запускаемая хостом на устройстве.

При использовании CUDA вы просто пишете код на своем любимом языке программирования (список поддерживаемых языков, не учитывая С и С++), после чего компилятор CUDA сгенерирует код отдельно для хоста и отдельно для устройства. Небольшая оговорка: код для устройства должен быть написан только на языке C с некоторыми ‘CUDA-расширениями’.

Основные этапы CUDA-программы

- Хост выделяет нужное количество памяти на устройстве.

- Хост копирует данные из своей памяти в память устройства.

- Хост стартует выполнение определенных ядер на устройстве.

- Устройство выполняет ядра.

- Хост копирует результаты из памяти устройства в свою память.

Естественно, для наибольшей эффективности использования GPU нужно чтобы соотношение времени, потраченного на работу ядер, к времени, потраченному на выделение памяти и перемещение данных, было как можно больше.

Ядра

Рассмотрим более детально процесс написания кода для ядер и их запуска. Важный принцип — ядра пишутся как (практически) обычные последовательные программы — то-есть вы не увидите создания и запуска потоков в коде самих ядер. Вместо этого, для организации параллельных вычислений GPU запустит большое количество копий одного и того же ядра в разных потоках — а точнее, вы сами говорите сколько потоков запустить. И да, возвращаясь к вопросу эффективности использования GPU — чем больше потоков вы запускаете (при условии что все они будут выполнять полезную работу) — тем лучше.

Код для ядер отличается от обычного последовательного кода в таких моментах:

- Внутри ядер вы имеете возможность узнать «идентификатор» или, проще говоря, позицию потока, который сейчас выполняется — используя эту позицию мы добиваемся того, что одно и то же ядро будет работать с разными данными в зависимости от потока, в котором оно запущено. Кстати, такая организация параллельных вычислений называется SIMD (Single Instruction Multiple Data) — когда несколько процессоров выполняют одновременно одну и ту же операцию но на разных данных.

- В некоторых случаях в коде ядра необходимо использовать различные способы синхронизации.

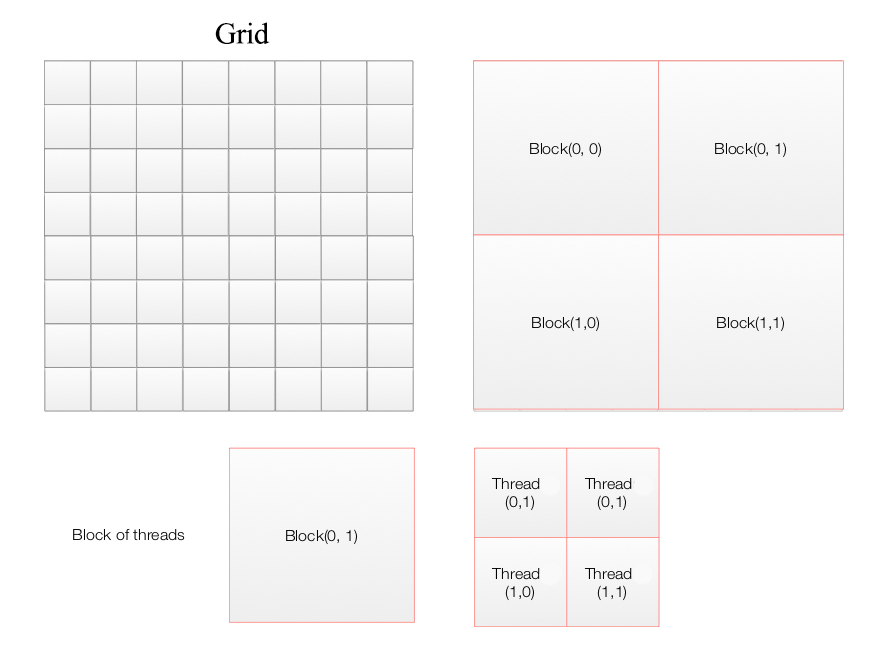

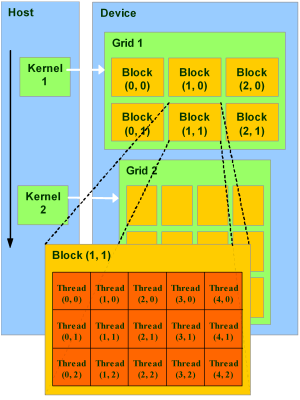

Каким же образом мы задаем количество потоков, в которых будет запущено ядро? Поскольку GPU это все таки Graphics Processing Unit, то это, естественно, повлияло на модель CUDA, а именно на способ задания количества потоков:

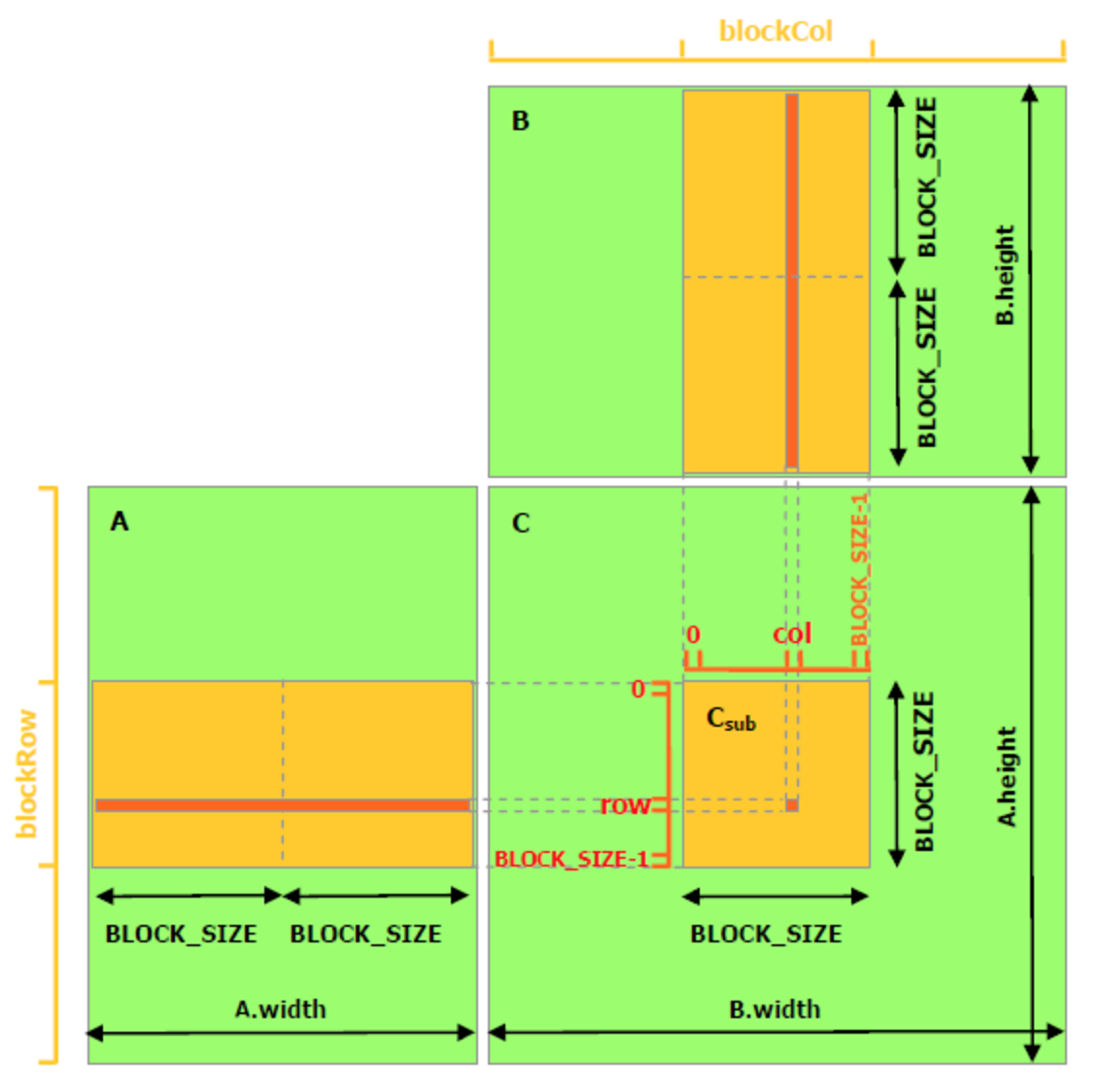

- Сначала задаются размеры так называемой сетки (grid), в 3D координатах: grid_x, grid_y, grid_z. В результате, сетка будет состоять из grid_x*grid_y*grid_z блоков.

- Потом задаются размеры блока в 3D координатах: block_x, block_y, block_z. В результате, блок будет состоять из block_x*block_y*block_z потоков. Итого, имеем grid_x*grid_y*grid_z*block_x*block_y*block_z потоков. Важное замечание — максимальное количество потоков в одном блоке ограничено и зависит от модели GPU — типичны значения 512 (более старые модели) и 1024 (более новые модели).

- Внутри ядра доступны переменные threadIdx и blockIdx с полями x, y, z — они содержат 3D координаты потока в блоке и блока в сетке соответственно. Также доступны переменные blockDim и gridDim с теми же полями — размеры блока и сетки соответственно.

Как видите, данный способ запуска потоков действительно подходит для обработки 2D и 3D изображений: например, если нужно определенным образом обработать каждый пиксел 2D либо 3D изображения, то после выбора размеров блока (в зависимости от размеров картинки, способа обработки и модели GPU) размеры сетки выбираются такими, чтобы было покрыто все изображение, возможно, с избытком — если размеры изображения не делятся нацело на размеры блока.

Пишем первую программу на CUDA

Довольно теории, время писать код. Инструкции по установке и конфигурации CUDA для разных ОС — docs.nvidia.com/cuda/index.html. Также, для простоты работы с файлами изображений будем использовать OpenCV, а для сравнения производительности CPU и GPU — OpenMP.

Задачу поставим довольно простую: конвертация цветного изображения в оттенки серого. Для этого, яркость пиксела pix в серой шкале считается по формуле: Y = 0.299*pix.R + 0.587*pix.G + 0.114*pix.B.

Сначала напишем скелет программы:

main.cpp

#include <chrono>

#include <iostream>

#include <cstring>

#include <string>

#include <opencv2/core/core.hpp>

#include <opencv2/highgui/highgui.hpp>

#include <opencv2/opencv.hpp>

#include <vector_types.h>

#include "openMP.hpp"

#include "CUDA_wrappers.hpp"

#include "common/image_helpers.hpp"

using namespace cv;

using namespace std;

int main( int argc, char** argv )

{

using namespace std::chrono;

if( argc != 2)

{

cout <<" Usage: convert_to_grayscale imagefile" << endl;

return -1;

}

Mat image, imageGray;

uchar4 *imageArray;

unsigned char *imageGrayArray;

prepareImagePointers(argv[1], image, &imageArray, imageGray, &imageGrayArray, CV_8UC1);

int numRows = image.rows, numCols = image.cols;

auto start = system_clock::now();

RGBtoGrayscaleOpenMP(imageArray, imageGrayArray, numRows, numCols);

auto duration = duration_cast<milliseconds>(system_clock::now() - start);

cout<<"OpenMP time (ms):" << duration.count() << endl;

memset(imageGrayArray, 0, sizeof(unsigned char)*numRows*numCols);

RGBtoGrayscaleCUDA(imageArray, imageGrayArray, numRows, numCols);

return 0;

}

Тут все довольно очевидно — читаем файл с изображением, подготавливаем указатели на цветное и в оттенках серого изображение, запускаем вариант

с OpenMP и вариант с CUDA, замеряем время. Функция prepareImagePointers имеет следующий вид:

prepareImagePointers

template <class T1, class T2>

void prepareImagePointers(const char * const inputImageFileName,

cv::Mat& inputImage,

T1** inputImageArray,

cv::Mat& outputImage,

T2** outputImageArray,

const int outputImageType)

{

using namespace std;

using namespace cv;

inputImage = imread(inputImageFileName, IMREAD_COLOR);

if (inputImage.empty())

{

cerr << "Couldn't open input file." << endl;

exit(1);

}

//allocate memory for the output

outputImage.create(inputImage.rows, inputImage.cols, outputImageType);

cvtColor(inputImage, inputImage, cv::COLOR_BGR2BGRA);

*inputImageArray = (T1*)inputImage.ptr<char>(0);

*outputImageArray = (T2*)outputImage.ptr<char>(0);

}

Я пошел на небольшую хитрость: дело в том, что мы выполняем очень мало работы на каждый пиксел изображения — то-есть при варианте с CUDA встает упомянутая выше проблема соотношения времени выполнения полезных операций к времени выделения памяти и копирования данных, и в результате общее время CUDA варианта будет больше OpenMP варианта, а мы же хотим показать что CUDA быстрее:) Поэтому для CUDA будет измеряться только время, потраченное на выполнение собственно конвертации изображения — без учета операций с памятью. В свое оправдание скажу, что для большого класса задач время полезной работы будет все-таки доминировать, и CUDA будет быстрее даже с учетом операций с памятью.

Далее напишем код для OpenMP варианта:

openMP.hpp

#include <stdio.h>

#include <omp.h>

#include <vector_types.h>

void RGBtoGrayscaleOpenMP(uchar4 *imageArray, unsigned char *imageGrayArray, int numRows, int numCols)

{

#pragma omp parallel for collapse(2)

for (int i = 0; i < numRows; ++i)

{

for (int j = 0; j < numCols; ++j)

{

const uchar4 pixel = imageArray[i*numCols+j];

imageGrayArray[i*numCols+j] = 0.299f*pixel.x + 0.587f*pixel.y+0.114f*pixel.z;

}

}

}

Все довольно прямолинейно — мы всего лишь добавили директиву omp parallel for к однопоточному коду — в этом вся красота и мощь OpenMP. Я пробовал поиграться с параметром schedule, но получалось только хуже, чем без него.

Наконец, переходим к CUDA. Тут распишем более детально. Сначала нужно выделить память под входные данные, переместить их с CPU на GPU и выделить память под выходные данные:

Скрытый текст

void RGBtoGrayscaleCUDA(const uchar4 * const h_imageRGBA, unsigned char* const h_imageGray, size_t numRows, size_t numCols)

{

uchar4 *d_imageRGBA;

unsigned char *d_imageGray;

const size_t numPixels = numRows * numCols;

cudaSetDevice(0);

checkCudaErrors(cudaGetLastError());

//allocate memory on the device for both input and output

checkCudaErrors(cudaMalloc(&d_imageRGBA, sizeof(uchar4) * numPixels));

checkCudaErrors(cudaMalloc(&d_imageGray, sizeof(unsigned char) * numPixels));

//copy input array to the GPU

checkCudaErrors(cudaMemcpy(d_imageRGBA, h_imageRGBA, sizeof(uchar4) * numPixels, cudaMemcpyHostToDevice));

Стоит обратить внимание на стандарт именования переменных в CUDA — данные на CPU начинаются с h_ (host), данные да GPU — с d_ (device). checkCudaErrors — макрос, взят с github-репозитория Udacity курса. Имеет следующий вид:

Скрытый текст

#include <cuda.h>

#define checkCudaErrors(val) check( (val), #val, __FILE__, __LINE__)

template<typename T>

void check(T err, const char* const func, const char* const file, const int line) {

if (err != cudaSuccess) {

std::cerr << "CUDA error at: " << file << ":" << line << std::endl;

std::cerr << cudaGetErrorString(err) << " " << func << std::endl;

exit(1);

}

}

cudaMalloc — аналог malloc для GPU, cudaMemcpy — аналог memcpy, имеет дополнительный параметр в виде enum-а, который указывает тип копирования: cudaMemcpyHostToDevice, cudaMemcpyDeviceToHost, cudaMemcpyDeviceToDevice.

Далее необходимо задать размеры сетки и блока и вызвать ядро, не забыв измерить время:

Скрытый текст

dim3 blockSize;

dim3 gridSize;

int threadNum;

cudaEvent_t start, stop;

cudaEventCreate(&start);

cudaEventCreate(&stop);

threadNum = 1024;

blockSize = dim3(threadNum, 1, 1);

gridSize = dim3(numCols/threadNum+1, numRows, 1);

cudaEventRecord(start);

rgba_to_grayscale_simple<<<gridSize, blockSize>>>(d_imageRGBA, d_imageGray, numRows, numCols);

cudaEventRecord(stop);

cudaEventSynchronize(stop);

cudaDeviceSynchronize(); checkCudaErrors(cudaGetLastError());

float milliseconds = 0;

cudaEventElapsedTime(&milliseconds, start, stop);

std::cout << "CUDA time simple (ms): " << milliseconds << std::endl;

Обратите внимание на формат вызова ядра — kernel_name<<<gridSize, blockSize>>>. Код самого ядра также не очень сложный:

rgba_to_grayscale_simple

__global__

void rgba_to_grayscale_simple(const uchar4* const d_imageRGBA,

unsigned char* const d_imageGray,

int numRows, int numCols)

{

int y = blockDim.y*blockIdx.y + threadIdx.y;

int x = blockDim.x*blockIdx.x + threadIdx.x;

if (x>=numCols || y>=numRows)

return;

const int offset = y*numCols+x;

const uchar4 pixel = d_imageRGBA[offset];

d_imageGray[offset] = 0.299f*pixel.x + 0.587f*pixel.y+0.114f*pixel.z;

}

Здесь мы вычисляем координаты y и x обрабатываемого пиксела, используя ранее описанные переменные threadIdx, blockIdx и blockDim, ну и выполняем конвертацию. Обратите внимание на проверку if (x>=numCols || y>=numRows) — так как размеры изображения не обязательно будут делится нацело на размеры блоков, некоторые блоки могут «выходить за рамки» изображения — поэтому необходима эта проверка. Также, функция ядра должна помечаться спецификатором __global__ .

Последний шаг — cкопировать результат назад с GPU на CPU и освободить выделенную память:

Скрытый текст

checkCudaErrors(cudaMemcpy(h_imageGray, d_imageGray, sizeof(unsigned char) * numPixels, cudaMemcpyDeviceToHost));

cudaFree(d_imageGray);

cudaFree(d_imageRGBA);

Кстати, CUDA позволяет использовать C++ компилятор для host-кода — так что запросто можно написать обертки для автоматического освобождения памяти.

Итак, запускаем, измеряем (размер входного изображения — 10,109 × 4,542):

OpenMP time (ms):45

CUDA time simple (ms): 43.1941

Конфигурация машины, на которой проводились тесты:

Скрытый текст

Процессор: Intel® Core(TM) i7-3615QM CPU @ 2.30GHz.

GPU: NVIDIA GeForce GT 650M, 1024 MB, 900 MHz.

RAM: DD3, 2x4GB, 1600 MHz.

OS: OS X 10.9.5.

Компилятор: g++ (GCC) 4.9.2 20141029.

CUDA компилятор: Cuda compilation tools, release 6.0, V6.0.1.

Поддерживаемая версия OpenMP: OpenMP 4.0.

Получилось как-то не очень впечатляюще:) А проблема все та же — слишком мало работы выполняется над каждым пикселом — мы запускаем тысячи потоков, каждый из которых отрабатывает практически моментально. В случае с CPU такой проблемы не возникает — OpenMP запустит сравнительно малое количество потоков (8 в моем случае) и разделит работу между ними поровну — таким образом процессоры будет занят практически на все 100%, в то время как с GPU мы, по сути, не используем всю его мощь. Решение довольно очевидное — обрабатывать несколько пикселов в ядре. Новое, оптимизированное, ядро будет выглядеть следующим образом:

rgba_to_grayscale_optimized

#define WARP_SIZE 32

__global__

void rgba_to_grayscale_optimized(const uchar4* const d_imageRGBA,

unsigned char* const d_imageGray,

int numRows, int numCols,

int elemsPerThread)

{

int y = blockDim.y*blockIdx.y + threadIdx.y;

int x = blockDim.x*blockIdx.x + threadIdx.x;

const int loop_start = (x/WARP_SIZE * WARP_SIZE)*(elemsPerThread-1)+x;

for (int i=loop_start, j=0; j<elemsPerThread && i<numCols; i+=WARP_SIZE, ++j)

{

const int offset = y*numCols+i;

const uchar4 pixel = d_imageRGBA[offset];

d_imageGray[offset] = 0.299f*pixel.x + 0.587f*pixel.y+0.114f*pixel.z;

}

}

Здесь не все так просто как с предыдущим ядром. Если разобраться, теперь каждый поток будет обрабатывать elemsPerThread пикселов, причем не подряд, а с расстоянием в WARP_SIZE между ними. Что такое WARP_SIZE, почему оно равно 32, и зачем обрабатывать пиксели пободным образом, будет более детально рассказано в следующих частях, сейчас только скажу что этим мы добиваемся более эффективной работы с памятью. Каждый поток теперь обрабатывает elemsPerThread пикселов с расстоянием в WARP_SIZE между ними, поэтому x-координата первого пиксела для этого потока исходя из его позиции в блоке теперь рассчитывается по несколько более сложной формуле чем раньше.

Запускается это ядро следующим образом:

Скрытый текст

threadNum=128;

const int elemsPerThread = 16;

blockSize = dim3(threadNum, 1, 1);

gridSize = dim3(numCols / (threadNum*elemsPerThread) + 1, numRows, 1);

cudaEventRecord(start);

rgba_to_grayscale_optimized<<<gridSize, blockSize>>>(d_imageRGBA, d_imageGray, numRows, numCols, elemsPerThread);

cudaEventRecord(stop);

cudaEventSynchronize(stop);

cudaDeviceSynchronize(); checkCudaErrors(cudaGetLastError());

milliseconds = 0;

cudaEventElapsedTime(&milliseconds, start, stop);

std::cout << "CUDA time optimized (ms): " << milliseconds << std::endl;

Количество блоков по x-координате теперь рассчитывается как numCols / (threadNum*elemsPerThread) + 1 вместо numCols / threadNum + 1. В остальном все осталось так же.

Запускаем:

OpenMP time (ms):44

CUDA time simple (ms): 53.1625

CUDA time optimized (ms): 15.9273

Получили прирост по скорости в 2.76 раза (опять же, не учитывая время на операции с памятью) — для такой простой проблемы это довольно неплохо. Да-да, эта задача слишком простая — с ней достаточно хорошо справляется и CPU. Как видно из второго теста, простая реализация на GPU может даже проигрывать по скорости реализации на CPU.

На сегодня все, в следующей части рассмотрим аппаратное обеспечение GPU и основные шаблоны параллельной коммуникации.

Весь исходный код доступен на bitbucket.

CUDA Programming

The goal of this document is only to make it easier for new developpers to undestand the overall CUDA paradigm and NVIDIA GPU features.

This document is just a high level overview of CUDA features and CUDA programming model. It is probably simpler to read it before installing the CUDA Toolkit, reading the CUDA C Programming guide, CUDA Runtime API and code samples.

For a complete and deep overview of the CUDA Toolkit and CUDA Runtime API and CUDA Libraries, please consult NVIDIA websites:

- The CUDA Zone:

- https://developer.nvidia.com/cuda-zone

- Join The CUDA Registered Developer Program:

- https://developer.nvidia.com/registered-developer-program

- Information about the CUDA Toolkit:

- https://developer.nvidia.com/cuda-toolkit

- Download the latest CUDA Toolkit (Mac OSX / Linux x86 / Linux ARM / Windows):

- https://developer.nvidia.com/cuda-downloads

Table of contents

- Introduction

- Terminology

- CUDA Software Architecture

- Versioning and Compatibility

- Thread Hierarchy

- Kernel

- Thread

- Warp

- Block

- Grid

- Memory

- Host Memory

- Device Memory

- Unified Memory

- Asynchronous Concurrency Execution

- [Concurrent Data Access](#Concurrent Data Access)

- Threads Synchronization

- Atomic Functions

- Concurrent Execution between Host and Device

- Concurrent Data Transfers

- Concurrent Kernel Execution

- Overlap of Data Transfer and Kernel Execution

- Streams

- Callbacks

- Events

- Dynamic Parallelism

- Hyper-Q

- [Concurrent Data Access](#Concurrent Data Access)

- Multi-Device System

- Stream and Event Behavior

- GPU Direct

Annexes

- CUDA Libraries

- cuBLAS

- cuSPARSE

- cuSOLVER

- cuFFT

- cuRAND

- cuDNN

- NPP

- Atomic Functions

Introduction

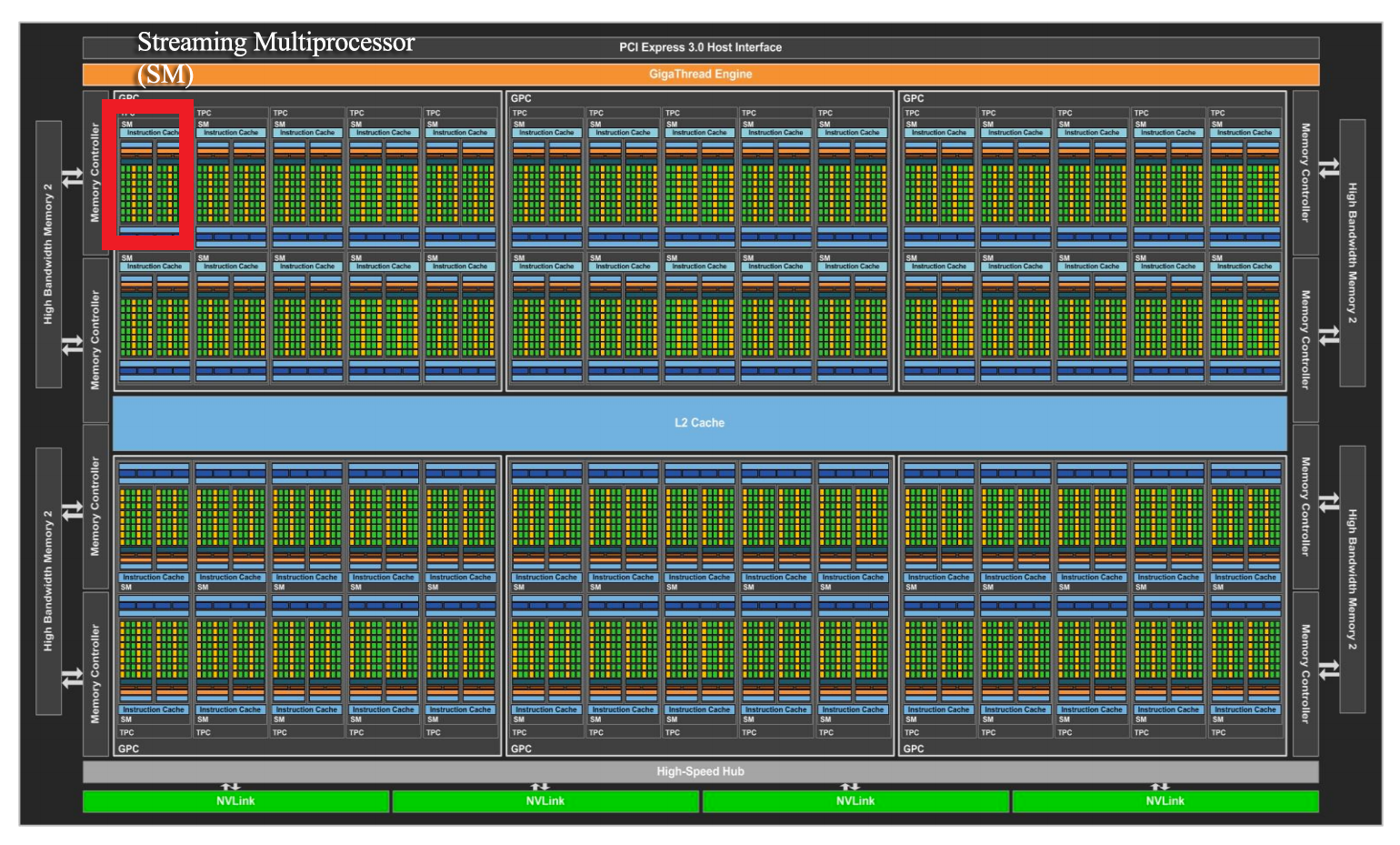

GPU is specialized for compute-intensive, highly parallel computation — exactly what graphics rendering is about — and therefore designed such that more transistors are devoted to data processing rather than data caching and flow control.

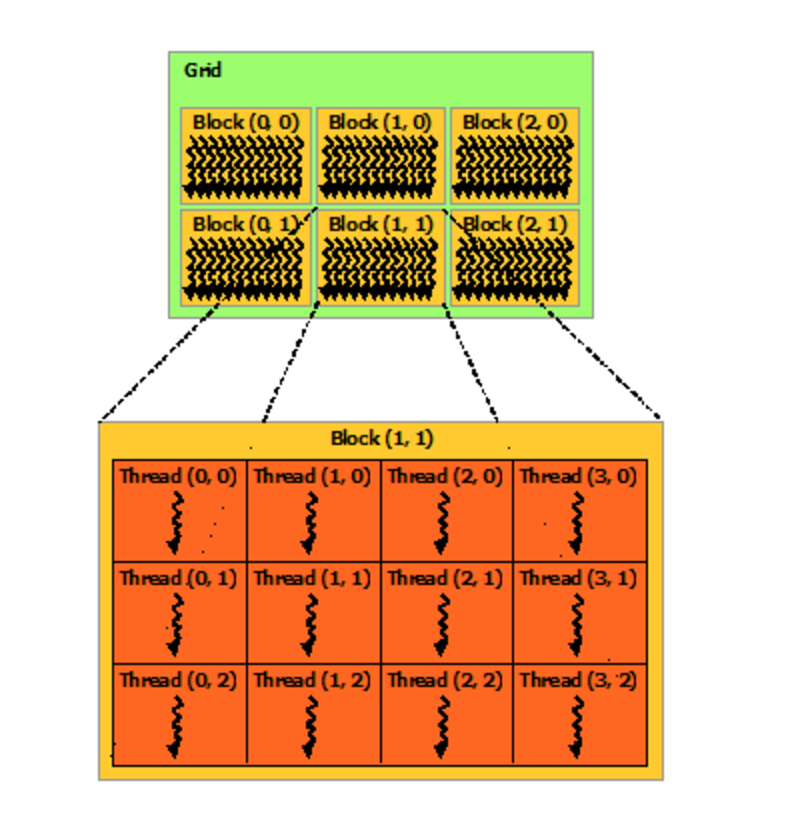

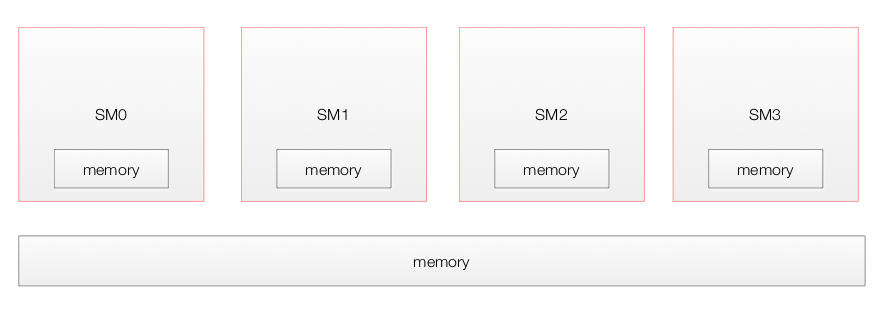

The NVIDIA GPU architecture is built around a scalable array of multithreaded Streaming Multiprocessors (SMs). When a CUDA program on the host CPU invokes a kernel grid, the blocks of the grid are enumerated and distributed to multiprocessors with available execution capacity. The threads of a thread block execute concurrently on one multiprocessor, and multiple thread blocks can execute concurrently on one multiprocessor. As thread blocks terminate, new blocks are launched on the vacated multiprocessors.

A multiprocessor is designed to execute hundreds of threads concurrently. To manage such a large amount of threads, it employs a unique architecture called SIMT (Single-Instruction, Multiple-Thread). The instructions are pipelined to leverage instruction-level parallelism within a single thread, as well as thread-level parallelism extensively through simultaneous hardware multithreading. Unlike CPU cores they are issued in order however and there is no branch prediction and no speculative execution.

Terminology

-

HOST: The CPU and its memory (Host Memory)

-

DEVICE: The GPU and its memory (Device Memory)

-

COMPUTE CAPABILITY: The compute capability of a device is represented by a version number, also sometimes called its «SM version». This version number identifies the features supported by the GPU hardware and is used by applications at runtime to determine which hardware features and/or instructions are available on the present GPU.

- The compute capability version comprises a major and a minor version number (x.y):

- Devices with the same major revision number are of the same core architecture:

- 1 = Telsa architecture

- 2 = Fermi architecture

- 3 = Kepler architecture

- 5 = Maxwell architecture

- The minor revision number corresponds to an incremental improvement to the core architecture, possibly including new features.

- Devices with the same major revision number are of the same core architecture:

- The compute capability version comprises a major and a minor version number (x.y):

Notes:

- The compute capability version of a particular GPU should not be confused with the CUDA version (e.g., CUDA 5.5, CUDA 6, CUDA 6.5, CUDA 7.0), which is the version of the CUDA software platform.

- The Tesla architecture (Compute Capability 1.x) is no longer supported starting with CUDA 7.0.

| Architecture specifications Compute capability (version) | 1.0 | 1.1 | 1.2 | 1.3 | 2.0 | 2.1 | 3.0 | 3.5 | 5.0 | 5.2 |

|---|---|---|---|---|---|---|---|---|---|---|

| Number of cores for integer and floating-point arithmetic functions operations | 8 | 8 | 8 | 8 | 32 | 48 | 192 | 192 | 128 | 128 |

|

MacBook Pro 15′ NVIDIA GeForce GT 750M 2 Giga 384 cores with 2 GB |

|

NVIDIA Tesla GPU Accelerator K40

2.880 cores with 12 GB |

CUDA Software Architecture

CUDA is composed of two APIs:

- A low-level API called the CUDA Driver API,

- A higher-level API called the CUDA Runtime API that is implemented on top of the CUDA driver API.

These APIs are mutually exclusive: An application should use either one or the other.

- The CUDA runtime eases device code management by providing implicit initialization, context management, and module management.

- In contrast, the CUDA driver API requires more code, is harder to program and debug, but offers a better level of control and is language-independent since it only deals with cubin objects. In particular, it is more difficult to configure and launch kernels using the CUDA driver API, since the execution configuration and kernel parameters must be specified with explicit function calls. Also, device emulation does not work with the CUDA driver API.

The easiest way to write an application that use GPU acceleration is to use existing CUDA libraries if they correspond to your need: cuBLAS, cuRAND, cu FFT, cuSOLVER, cuDNN, …

If existing libraries do not fit your need then you must write your own GPU functions in C language ( CUDA call them kernels, and CUDA C program have «.cu» file extension ) and use them in your application by using CUDA Runtime API, and linking CUDA Runtime library and your kernels functions to your application.

Versioning and Compatibility

-

The driver API is backward compatible, meaning that applications, plug-ins, and libraries (including the C runtime) compiled against a particular version of the driver API will continue to work on subsequent device driver releases.

-

The driver API is not forward compatible, which means that applications, plug-ins, and libraries (including the C runtime) compiled against a particular version of the driver API will not work on previous versions of the device driver.

-

Only one version of the CUDA device driver can be installed on a system.

-

All plug-ins and libraries used by an application must use the same version of:

- any CUDA libraries (such as cuFFT, cuBLAS, …)

- the associated CUDA runtime.

Thread Hierarchy

CUDA C extends C by allowing the programmer to define C functions, called kernels, that, when called, are executed N times in parallel by N different CUDA threads, as opposed to only once like regular C functions.

The CUDA programming model assumes that the CUDA threads execute on a physically separate device that operates as a coprocessor to the host running the C program. This is the case, for example, when the kernels execute on a GPU and the rest of the Golang program executes on a CPU.

Calling a kernel function from the Host launch a grid of thread blocks on the Device:

Kernel

A kernel is a special C function defined using the __global__ declaration specifier and the number of CUDA threads that execute that kernel for a given kernel call is specified using a new <<<...>>> execution configuration syntax. Each thread that executes the kernel is given a unique thread ID that is accessible within the kernel through the built-in threadIdx variable.

The following sample code adds two vectors A and B of size 16 and stores the result into vector C:

// Kernel definition __global__ void VecAdd(float* A, float* B, float* C) { int i = threadIdx.x; C[i] = A[i] + B[i]; } int main() { ... // Kernel invocation of 1 Block with 16 threads VecAdd<<< 1, 16 >>>(A, B, C); ... }

Here, each of the 16 threads that execute VecAdd() performs one pair-wise addition:

Thread

For convenience, threadIdx is a 3-component vector, so that threads can be identified using a one-dimensional, two-dimensional, or three-dimensional thread index, forming a one-dimensional, two-dimensional, or three-dimensional thread block. This provides a natural way to invoke computation across the elements in a domain such as a vector, matrix, or volume.

The index of a thread and its thread ID relate to each other in a straightforward way:

- For a one-dimensional block of size (Dx), the thread ID of a thread of index (x) are the same:

= x

- For a two-dimensional block of size (Dx, Dy), the thread ID of a thread of index (x, y) is:

= x + y*Dx

- For a three-dimensional block of size (Dx, Dy, Dz), the thread ID of a thread of index (x, y, z) is:

= x + y*Dx + z Dx*Dy.

Note: Even if the programmer want to use 1, 2 or 3 dimensions for his data representation, Memory is still a 1 dimension vector at the end …

The following code adds two matrices A and B of size NxN and stores the result into matrix C:

// Kernel definition __global__ void MatAdd(float A[N][N], float B[N][N], float C[N][N]) { int i = threadIdx.x; int j = threadIdx.y; C[i][j] = A[i][j] + B[i][j]; } int main() { ... // Kernel invocation with one block of N * N * 1 threads dim3 threadsPerBlock(N, N); MatAdd<<< 1, threadsPerBlock >>>(A, B, C); ... }

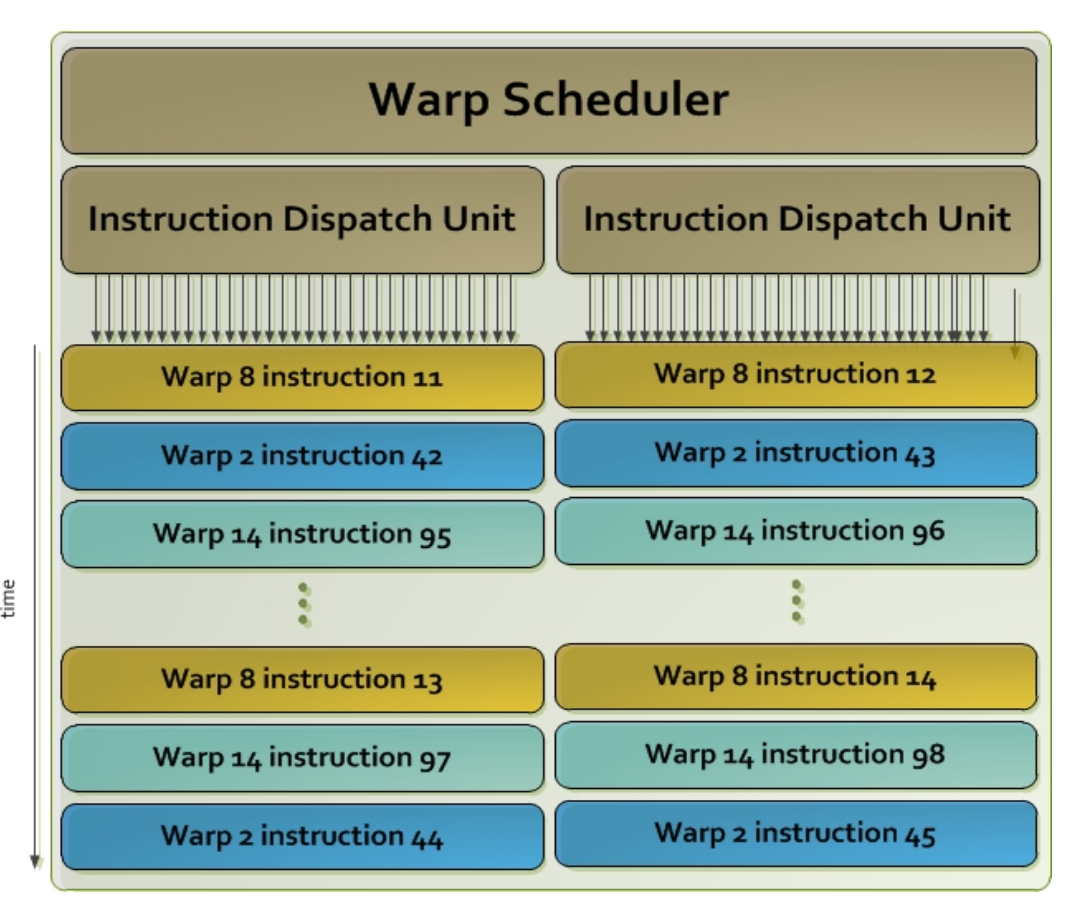

Warp

The multiprocessor creates, manages, schedules, and executes threads in groups of 32 parallel threads called warps. Individual threads composing a warp start together at the same program address, but they have their own instruction address counter and register state and are therefore free to branch and execute independently. The term warp originates from weaving, the first parallel thread technology.

When a multiprocessor is given one or more thread blocks to execute, it partitions them into warps and each warp gets scheduled by a warp scheduler for execution. The way a block is partitioned into warps is always the same; each warp contains threads of consecutive, increasing thread IDs with the first warp containing thread 0.

A warp executes one common instruction at a time, so full efficiency is realized when all 32 threads of a warp agree on their execution path. If threads of a warp diverge via a data-dependent conditional branch, the warp serially executes each branch path taken, disabling threads that are not on that path, and when all paths complete, the threads converge back to the same execution path. Branch divergence occurs only within a warp; different warps execute independently regardless of whether they are executing common or disjoint code paths.

The threads of a warp that are on that warp’s current execution path are called the active threads, whereas threads not on the current path are inactive (disabled). Threads can be inactive because they have exited earlier than other threads of their warp, or because they are on a different branch path than the branch path currently executed by the warp, or because they are the last threads of a block whose number of threads is not a multiple of the warp size.

Block

Blocks are organized into a one-dimensional, two-dimensional, or three-dimensional grid of thread blocks.

The number of threads per block and the number of blocks per grid specified in the <<<...>>> syntax can be of type int or dim3. Two-dimensional blocks or grids can be specified as in the example above.

- Each block within the grid can be identified by a one-dimensional, two-dimensional, or three-dimensional index accessible within the kernel through the built-in

blockIdxvariable. - The dimension of the thread block is accessible within the kernel through the built-in

blockDimvariable.

// Kernel definition __global__ void fooKernel( ... ) { unsigned int x = blockIdx.x * blockDim.x + threadIdx.x; unsigned int y = blockIdx.y * blockDim.y + threadIdx.y; } int main() { ... // Kernel invocation with 3x2 blocks of 4x3 threads dim3 dimGrid(3, 2, 1); dim3 dimBlock(4, 3, 1); fooKernel<<< dimGrid, dimBlock >>>( ... ); ... }

Consequence of fooKernel<<< dimGrid, dimBlock >>> invocation is the launch of a Grid consisting of 3×2 Blocks with 4×3 Threads in each block:

Grid

There is a limit to the number of threads per block, since all threads of a block are expected to reside on the same processor core and must share the limited memory resources of that core. On current GPUs, a thread block may contain up to 1024 threads.

However, a kernel can be executed by multiple equally-shaped thread blocks, so that the total number of threads is equal to the number of threads per block times the number of blocks.

The number of thread blocks in a grid is usually dictated by the size of the data being processed or the number of processors in the system, which it can greatly exceed.

Automatic Scalability

Thread blocks are required to execute independently: It must be possible to execute them in any order, in parallel or in series. This independence requirement allows thread blocks to be scheduled in any order across any number of cores, enabling programmers to write code that scales with the number of cores.

A GPU is built around an array of Streaming Multiprocessors (SMs). A Grid is partitioned into blocks of threads that execute independently from each other, so that a GPU with more multiprocessors will automatically execute the Grid in less time than a GPU with fewer multiprocessors.

| Technical Specifications per Compute Capability | 1.1 | 1.2 — 1.3 | 2.x | 3.0 — 3.2 | 3.5 | 3.7 | 5.0 | 5.2 |

|---|---|---|---|---|---|---|---|---|

| Grid of thread blocks | ||||||||

| Maximum dimensionality of a grid | 2 | 2 | 3 | 3 | 3 | 3 | 3 | 3 |

| Maximum x-dimension of a grid | 65535 | 65535 | 65535 | 2^31-1 | 2^31-1 | 2^31-1 | 2^31-1 | 2^31-1 |

| Maximum y- or z-dimension of a grid | 65535 | 65535 | 65535 | 65535 | 65535 | 65535 | 65535 | 65535 |

| Thread Block | ||||||||

| Maximum dimensionality of a block | 3 | 3 | 3 | 3 | 3 | 3 | 3 | 3 |

| Maximum x- or y-dimension of a block | 512 | 512 | 1024 | 1024 | 1024 | 1024 | 1024 | 1024 |

| Maximum z-dimension of a block | 64 | 64 | 64 | 64 | 64 | 64 | 64 | 64 |

| Maximum number of threads per block | 512 | 512 | 1024 | 1024 | 1 024 | 1.024 | 1 024 | 1024 |

| Per Multiprocessor | ||||||||

| Warp size | 32 | 32 | 32 | 32 | 32 | 32 | 32 | 32 |

| Maximum number of resident blocks | 8 | 8 | 8 | 16 | 16 | 16 | 32 | 32 |

| Maximum number of resident warps | 24 | 32 | 48 | 64 | 64 | 64 | 64 | 64 |

| Maximum number of resident threads | 768 | 1024 | 1536 | 2048 | 2048 | 2048 | 2048 | 2048 |

Memory

The GPU card contains its own DRAM memory separatly from the CPU’s DRAM.

- A typical CUDA compute job begins by copying data to the GPU, usually to global memory.

- The GPU then asynchronously runs the compute task it has been assigned.

- When the host makes a call to copy memory back to host memory, the call will block until all threads have completed and the data is available, at which point results are sent back.

Host Memory

- Page-Locked Host Memory (Pinned Memory)

The CUDA runtime provides functions to allow the use of page-locked (also known as pinned) host memory (as opposed to regular pageable host memory):

Using page-locked host memory has several benefits:

- Copies between page-locked host memory and device memory can be performed concurrently with kernel execution for some devices.

- On some devices, page-locked host memory can be mapped into the address space of the device, eliminating the need to copy it to or from device memory.

- On systems with a front-side bus, bandwidth between host memory and device memory is higher if host memory is allocated as page-locked and even higher if in addition it is allocated as write-combining.

Device Memory

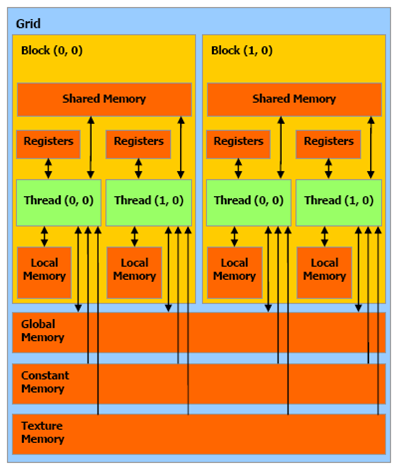

CUDA threads may access data from multiple memory spaces during their execution:

- Local memory

- Each thread has private local memory (per-thread local memory).

- Local memory is so named because its scope is local to the thread, not because of its physical location. In fact, local memory is off-chip (=DRAM). Hence, access to local memory is as expensive as access to global memory. In other words, the term local in the name does not imply faster access.

- Shared memory

- Each thread block has shared memory visible to all threads of the block and with the same lifetime as the block (per-block shared memory).

- Because it is on-chip, shared memory has much higher bandwidth and lower latency than local and global memory.

- Global memory

- All threads have access to the same global memory (=DRAM).

| Technical Specifications per Compute Capability | 1.1 | 1.2 — 1.3 | 2.x | 3.0 — 3.2 | 3.5 | 3.7 | 5.0 | 5.2 |

|---|---|---|---|---|---|---|---|---|

| Registers | ||||||||

| Number of 32-bit registers per multiprocessor | 8k | 16k | 32k | 64k | 64k | 128k | 64k | 64k |

| Maximum number of 32-bit registers per thread block | 32k | 64k | 64k | 64k | 64k | 64k | ||

| Maximum number of 32-bit registers per thread | 128 | 128 | 63 | 63 | 255 | 255 | 255 | 255 |

| Local Memory | ||||||||

| Maximum amount of local memory per thread | 16 KB | 16 KB | 512 KB | 512 KB | 512 KB | 512 KB | 512 KB | 512 KB |

| Shared Memory | ||||||||

| Maximum amount of shared memory per multiprocessor | 16 KB | 16 KB | 48 KB | 48 KB | 48 KB | 112 KB | 64 KB | 96 KB |

| Maximum amount of shared memory per thread block | 16 KB | 16 KB | 48 KB | 48 KB | 48 KB | 48 KB | 48 KB | 48 KB |

Unified Memory

Data that is shared between the CPU and GPU must be allocated in both memories, and explicitly copied between them by the program. This adds a lot of complexity to CUDA programs.

Unified Memory creates a pool of managed memory that is shared between the CPU and GPU, bridging the CPU-GPU divide. Managed memory is accessible to both the CPU and GPU using a single pointer. The key is that the system automatically migrates data allocated in Unified Memory between host and device so that it looks like CPU memory to code running on the CPU, and like GPU memory to code running on the GPU.

The CUDA runtime hides all the complexity, automatically migrating data to the place where it is accessed.

An important point is that a carefully tuned CUDA program that uses streams and cudaMemcpyAsync to efficiently overlap execution with data transfers may very well perform better than a CUDA program that only uses Unified Memory. Understandably so: the CUDA runtime never has as much information as the programmer does about where data is needed and when! CUDA programmers still have access to explicit device memory allocation and asynchronous memory copies to optimize data management and CPU-GPU concurrency.

Unified Memory is first and foremost a productivity feature that provides a smoother on-ramp to parallel computing, without taking away any of CUDA’s features for power users.

Asynchronous Concurrency Execution

Concurrent Data Access

Threads Synchronization

Threads can access each other’s results through shared and global memory: they can work together. What if a thread reads a result before another thread writes it ? Threads need to synchronize.

Thread blocks are required to execute independently: It must be possible to execute them in any order, in parallel or in series. This independence requirement allows thread blocks to be scheduled in any order across any number of cores, enabling programmers to write code that scales with the number of cores.

But threads within a block can cooperate by sharing data through some shared memory and by synchronizing their execution to coordinate memory accesses. More precisely, one can specify synchronization points in the kernel by calling the __syncthreads() intrinsic function. __syncthreads() acts as a barrier at which all threads in the block must wait before any is allowed to proceed.

The __syncthreads() command is a block level synchronization barrier. That means is safe to be used when all threads in a block reach the barrier.

Atomic Functions

An atomic function performs a read-modify-write atomic operation on one 32-bit or 64-bit word residing in global or shared memory. For example, atomicAdd() reads a word at some address in global or shared memory, adds a number to it, and writes the result back to the same address. The operation is atomic in the sense that it is guaranteed to be performed without interference from other threads: no other thread can access this address until the operation is complete.

- Atomic functions can only be used in device functions.

- Atomic functions operating on mapped page-locked memory (Mapped Memory) are not atomic from the point of view of the host or other devices.

| Arithmetic Functions | Bitwise Functions |

|---|---|

| atomicAdd atomicSub atomicExch atomicMin atomicMax atomicInc atomicDec atomicCAS |

atomicAnd atomicOr atomicXor |

Concurrent Execution between Host and Device

In order to facilitate concurrent execution between host and device, some function calls are asynchronous:

- Kernel launches

- Memory copies between two addresses to the same device memory

- Memory copies from host to device of a memory block of 64 KB or less

- Memory copies performed by functions that are suffixed with Async

- Memory set function calls

Control is returned to the host thread before the device has completed the requested task.

Concurrent Data Transfers

Devices with Compute Capability >= 2.0 can perform a copy from page-locked host memory to device memory concurrently with a copy from device memory to page-locked host memory.

Applications may query this capability by checking the asyncEngineCount device property, which is equal to 2 for devices that support it.

Concurrent Kernel Execution

Devices with compute capability >= 2.0 and can execute multiple kernels concurrently. Applications may query this capability by checking the concurrentKernels device property, which is equal to 1 for devices that support it.

The maximum number of kernel launches that a device can execute concurrently is:

| Compute Capability | Maximum number of concurrent kernels per GPU |

|—|—|—|

| 1.x | not supported |

| 2.x | 16 |

| 3.0, 3.2 | 16 |

| 3.5, 3.7 | 32 |

| 5.0, 5.2 | 32 |

A kernel from one CUDA context cannot execute concurrently with a kernel from another CUDA context.

Overlap of Data Transfer and Kernel Execution

Some devices can perform copies between page-locked host memory and device memory concurrently with kernel execution.

Streams

Applications manage concurrency through streams. A stream is a sequence of commands (possibly issued by different host threads) that execute in order. Different streams, on the other hand, may execute their commands out of order with respect to one another or concurrently.

A stream is defined by creating a stream object and specifying it as the stream parameter to a sequence of kernel launches and host <-> device memory copies.

The amount of execution overlap between two streams depends on the order in which the commands are issued to each stream and whether or not the device supports overlap of data transfer and kernel execution, concurrent kernel execution, and/or concurrent data transfers.

Default Stream

Kernel launches and host <-> device memory copies that do not specify any stream parameter, or equivalently that set the stream parameter to zero, are issued to the default stream. They are therefore executed in order in the default stream.

The relative priorities of streams can be specified at creation. At runtime, as blocks in low-priority schemes finish, waiting blocks in higher-priority streams are scheduled in their place.

Explicit Synchronization

There are various ways to explicitly synchronize streams with each other.

| Synchonization functions | Description |

|---|---|

cudaDeviceSynchronize() |

Waits until all preceding commands in ALL streams of ALL host threads have completed. |

cudaStreamSynchronize() |

Takes a stream as a parameter and waits until all preceding commands in the given stream have completed. It can be used to synchronize the host with a specific stream, allowing other streams to continue executing on the device. |

cudaStreamWaitEvent() |

Takes a stream and an event as parameters and makes all the commands added to the given stream after the call to cudaStreamWaitEvent() delay their execution until the given event has completed. The stream can be 0, in which case all the commands added to any stream after the call to cudaStreamWaitEvent() wait on the event. |

cudaStreamQuery() |

Provides applications with a way to know if all preceding commands in a stream have completed. |

To avoid unnecessary slowdowns, all these synchronization functions are usually best used for timing purposes or to isolate a launch or memory copy that is failing.

Implicit Synchronization

Two commands from different streams cannot run concurrently if any one of the following operations is issued in-between them by the host thread:

- a page-locked host memory allocation,

- a device memory allocation,

- a device memory set,

- a memory copy between two addresses to the same device memory,

- any CUDA command to the NULL stream,

- a switch between the L1/shared memory configurations ( Compute Capability 2.x and 3.x).

For devices that support concurrent kernel execution and are of compute capability 3.0 or lower, any operation that requires a dependency check to see if a streamed kernel launch is complete:

- Can start executing only when all thread blocks of all prior kernel launches from any stream in the CUDA context have started executing;

- Blocks all later kernel launches from any stream in the CUDA context until the kernel launch being checked is complete.

- Operations that require a dependency check include any other commands within the same stream as the launch being checked and any call to

cudaStreamQuery()on that stream.

Therefore, applications should follow these guidelines to improve their potential for concurrent kernel execution:

- All independent operations should be issued before dependent operations,

- Synchronization of any kind should be delayed as long as possible.

Callbacks

The runtime provides a way to insert a callback at any point into a stream via cudaStreamAddCallback(). A callback is a function that is executed on the host once all commands issued to the stream before the callback have completed. Callbacks in stream 0 are executed once all preceding tasks and commands issued in all streams before the callback have completed.

The commands that are issued in a stream (or all commands issued to any stream if the callback is issued to stream 0) after a callback do not start executing before the callback has completed.

A callback must not make CUDA API calls (directly or indirectly), as it might end up waiting on itself if it makes such a call leading to a deadlock.

Events

The runtime also provides a way to closely monitor the device’s progress, as well as perform accurate timing, by letting the application asynchronously record events at any point in the program and query when these events are completed. An event has completed when all tasks — or optionally, all commands in a given stream — preceding the event have completed. Events in stream zero are completed after all preceding tasks and commands in all streams are completed.

Dynamic Parallelism

Dynamic Parallelism enables a CUDA kernel to create and synchronize new nested work, using the CUDA runtime API to launch other kernels, optionally

synchronize on kernel completion, perform device memory management, and create and use streams and events, all without CPU involvement.

Note: Need Compute Capability >= 3.5

Dynamic Parallelism dynamically spawns new threads by adapting to the data without going back to the CPU, greatly simplifying GPU programming and accelerating algorithms.

Hyper-Q

Hyper-Q enables multiple CPU threads or processes to launch work on a single GPU simultaneously,

thereby dramatically increasing GPU utilization and slashing CPU idle times.

This feature increases the total number of “connections” between the host and GPU by

allowing 32 simultaneous, hardware-managed connections, compared to the single

connection available with GPUs without Hyper-Q.

Hyper-Q is a flexible solution that allows connections for both CUDA streams and Message

Passing Interface (MPI) processes, or even threads from within a process. Existing

applications that were previously limited by false dependencies can see a dramatic

performance increase without changing any existing code.

Note: Need Compute Capability >= 3.5

Multi-Device System

A host system can have multiple GPU Devices.

Stream and Event Behavior

- A kernel launch will fail if it is issued to a stream that is not associated to the current device.

- A memory copy will succeed even if it is issued to a stream that is not associated to the current device.

- Each device has its own default stream, so commands issued to the default stream of a device may execute out of order or concurrently with respect to commands issued to the default stream of any other device.

GPU Direct

Accelerated communication with network and storage devices

Network and GPU device drivers can share “pinned” (page-locked) buffers, eliminating the need to make a redundant copy in CUDA host memory.

Peer-to-Peer Transfers between GPUs

Use high-speed DMA transfers to copy data between the memories of two GPUs on the same system/PCIe bus.

Peer-to-Peer memory access

Optimize communication between GPUs using NUMA-style access to memory on other GPUs from within CUDA kernels.

RDMA

Eliminate CPU bandwidth and latency bottlenecks using remote direct memory access (RDMA) transfers between GPUs and other PCIe devices, resulting in significantly improved MPISendRecv efficiency between GPUs and other nodes)

GPUDirect for Video

Optimized pipeline for frame-based devices such as frame grabbers, video switchers, HD-SDI capture, and CameraLink devices.

- GPUDirect version 1 supported accelerated communication with network and storage devices. It was supported by InfiniBand solutions available from Mellanox and others.

- GPUDirect version 2 added support for peer-to-peer communication between GPUs on the same shared memory server.

- GPU Direct RDMA enables RDMA transfers across an Infiniband network between GPUs in different cluster nodes, bypassing CPU host memory altogether.

Using GPUDirect, 3rd party network adapters, solid-state drives (SSDs) and other devices can directly read and write CUDA host and device memory. GPUDirect eliminates unnecessary system memory copies, dramatically lowers CPU overhead, and reduces latency, resulting in significant performance improvements in data transfer times for applications running on NVIDIA products.

ANNEXES

CUDA Libraries

cuBLAS (Basic Linear Algebra Subroutines)

The NVIDIA CUDA Basic Linear Algebra Subroutines (cuBLAS) library is a GPU-accelerated version of the complete standard BLAS library.

- Complete support for all 152 standard BLAS routines

- Single, double, complex, and double complex data types

- Support for CUDA streams

- Fortran bindings

- Support for multiple GPUs and concurrent kernels

- Batched GEMM API

- Device API that can be called from CUDA kernels

- Batched LU factorization API

- Batched matrix inverse API

- New implementation of TRSV (Triangular solve), up to 7x faster than previous implementation.

To learn more about cuBLAS: https://developer.nvidia.com/cuBLAS

cuSPARSE (Sparse Matrix)

The NVIDIA CUDA Sparse Matrix library (cuSPARSE) provides a collection of basic linear algebra subroutines used for sparse matrices that delivers up to 8x faster performance than the latest MKL. The latest release includes a sparse triangular solver.

Supports dense, COO, CSR, CSC, ELL/HYB and Blocked CSR sparse matrix formats

Level 1 routines for sparse vector x dense vector operations

Level 2 routines for sparse matrix x dense vector operations

Level 3 routines for sparse matrix x multiple dense vectors (tall matrix)

Routines for sparse matrix by sparse matrix addition and multiplication

Conversion routines that allow conversion between different matrix formats

Sparse Triangular Solve

Tri-diagonal solver

Incomplete factorization preconditioners ilu0 and ic0

To learn more about cuSPARSE: https://developer.nvidia.com/cuSPARSE

cuSOLVER (Solver)

The NVIDIA cuSOLVER library provides a collection of dense and sparse direct solvers which deliver significant acceleration for Computer Vision, CFD, Computational Chemistry, and Linear Optimization applications.

- cusolverDN: Key LAPACK dense solvers 3-6x faster than MKL.

- Dense Cholesky, LU, SVD, QR

- Applications include: optimization, Computer Vision, CFD

- cusolverSP

- Sparse direct solvers & Eigensolvers

- Applications include: Newton’s method, Chemical Kinetics

- cusolverRF

- Sparse refactorization solver

- Applications include: Chemistry, ODEs, Circuit simulation

To learn more about cuSOLVER: https://developer.nvidia.com/cuSOLVER

cuFFT (Fast Fourier Transformation)

The FFT is a divide-and-conquer algorithm for efficiently computing discrete Fourier transforms of complex or real-valued data sets. It is one of the most important and widely used numerical algorithms in computational physics and general signal processing.

To learn more about cuFFT: https://developer.nvidia.com/cuFFT

cuRAND (Random Number)

The cuRAND library provides facilities that focus on the simple and efficient generation of high-quality pseudorandom and quasirandom numbers. A pseudorandom sequence of numbers satisfies most of the statistical properties of a truly random sequence but is generated by a deterministic algorithm. A quasirandom sequence of n -dimensional points is generated by a deterministic algorithm designed to fill an n-dimensional space evenly.

Random numbers can be generated on the device or on the host CPU.

To learn more about cuRAND: https://developer.nvidia.com/cuRAND

cuDNN (Deep Neural Network)

cuDNN is a GPU-accelerated library of primitives for deep neural networks. It provides highly tuned implementations of routines arising frequently in DNN applications:

- Convolution forward and backward, including cross-correlation

- Pooling forward and backward

- Softmax forward and backward

- Neuron activations forward and backward:

- Rectified linear (ReLU)

- Sigmoid

- Hyperbolic tangent (TANH)

- Tensor transformation functions

To learn more about cuDNN: https://developer.nvidia.com/cuDNN

NPP (NVIDIA Performance Primitive)

NVIDIA Performance Primitive (NPP) is a library of functions for performing CUDA accelerated processing. The initial set of

functionality in the library focuses on imaging and video processing and is widely applicable for developers

in these areas. NPP will evolve over time to encompass more of the compute heavy tasks in a variety of problem domains.

- Eliminates unnecessary copying of data to/from CPU memory

- Process data that is already in GPU memory

- Leave results in GPU memory so they are ready for subsequent processing

- Data Exchange and Initialization

- Set, Convert, Copy, CopyConstBorder, Transpose, SwapChannels

- Arithmetic and Logical Operations

- Add, Sub, Mul, Div, AbsDiff, Threshold, Compare

- Color Conversion

- RGBToYCbCr, YcbCrToRGB, YCbCrToYCbCr, ColorTwist, LUT_Linear

- Filter Functions

- FilterBox, Filter, FilterRow, FilterColumn, FilterMax, FilterMin, Dilate, Erode, SumWindowColumn, SumWindowRow

- JPEG

- DCTQuantInv, DCTQuantFwd, QuantizationTableJPEG

- Geometry Transforms

- Mirror, WarpAffine, WarpAffineBack, WarpAffineQuad, WarpPerspective, WarpPerspectiveBack , WarpPerspectiveQuad, Resize

- Statistics Functions

- Mean_StdDev, NormDiff, Sum, MinMax, HistogramEven, RectStdDev

To learn more about NPP: https://developer.nvidia.com/NPP

Atomic Functions

| FUNCTION | DESCRIPTION |

|---|---|

| Arithmetic | |

| atomicAdd | int atomicAdd(int* address, int val) int atomicAdd(int* address, int val) unsigned int atomicAdd(unsigned int* address, unsigned int val) unsigned long long int atomicAdd(unsigned long long int* address, unsigned long long int val) float atomicAdd(float* address, float val)

Reads the 32-bit or 64-bit word old located at the address |

| atomicSub | int atomicSub(int* address, int val) unsigned int atomicSub(unsigned int* address, unsigned int val)

Reads the 32-bit word |

| atomicExch | int atomicExch(int* address, int val) unsigned int atomicExch(unsigned int* address, unsigned int val) unsigned long long int atomicExch(unsigned long long int* address, unsigned long long int val) float atomicExch(float* address, float val)

Reads the 32-bit or 64-bit word |

| atomicMin | int atomicMin(int* address, int val) unsigned int atomicMin(unsigned int* address, unsigned int val) unsigned long long int atomicMin(unsigned long long int* address, unsigned long long int val)

Reads the 32-bit or 64-bit word |

| atomicMax | int atomicMax(int* address, int val) unsigned int atomicMax(unsigned int* address, unsigned int val) unsigned long long int atomicMax(unsigned long long int* address, unsigned long long int val)

Reads the 32-bit or 64-bit word old located at the address |

| atomicInc | unsigned int atomicInc(unsigned int* address, unsigned int val)

Reads the 32-bit word old located at the address |

| atomicDec | unsigned int atomicDec(unsigned int* address, unsigned int val)

Reads the 32-bit word |

| atomicCAS | int atomicCAS(int* address, int compare, int val) unsigned int atomicCAS(unsigned int* address, unsigned int compare, unsigned int val) unsigned long long int atomicCAS(unsigned long long int* address, unsigned long long int compare, unsigned long long int val)

Reads the 32-bit or 64-bit word |

| Bitwise | |

| atomicAnd | int atomicAnd(int* address, int val)

Reads the 32-bit or 64-bit word |

| atomicOr | int atomicOr(int* address, int val)

Reads the 32-bit or 64-bit word |

| atomicXor | int atomicXor(int* address, int val)

Reads the 32-bit or 64-bit word |

Sample code in adding 2 numbers with a GPU

Terminology: Host (a CPU and host memory), device (a GPU and device memory).

This sample code adds 2 numbers together with a GPU:

- Define a kernel (a function to run on a GPU).

- Allocate & initialize the host data.

- Allocate & initialize the device data.

- Invoke a kernel in the GPU.

- Copy kernel output to the host.

- Cleanup.

Define a kernel

Use the keyword __global__ to define a kernel. A kernel is a function to be run on a GPU instead of a CPU. This kernel adds 2 numbers (a) & (b) and store the result in (c).

// Kernel definition

// Run on GPU

// Adding 2 numbers and store the result in c

__global__ void add(int *a, int *b, int *c)

{

*c = *a + *b;

}

Allocate & initialize host data

In the host, allocate the input and output parameters for the kernel call, and initiate all input parameters.

int main(void) {

// Allocate & initialize host data - run on the host

int a, b, c; // host copies of a, b, c

a = 2;

b = 7;

...

}

Allocate and copy host data to the device

A CUDA application manages the device space memory through calls to the CUDA runtime. This includes device memory allocation and deallocation as well as data transfer between the host and device memory.

We allocate space in the device so we can copy the input of the kernel ((a) & (b)) from the host to the device. We also allocate space to copy result from the device to the host later.

int main(void) {

...

int *d_a, *d_b, *d_c; // device copies of a, b, c

// Allocate space for device copies of a, b, c

cudaMalloc((void **)&d_a, size);

cudaMalloc((void **)&d_b, size);

cudaMalloc((void **)&d_c, size);

// Copy a & b from the host to the device

cudaMemcpy(d_a, &a, size, cudaMemcpyHostToDevice);

cudaMemcpy(d_b, &b, size, cudaMemcpyHostToDevice);

...

}

Invoke the kernel

Invoke the kernel add with parameters for (a, b, c.)

int main(void) {

...

// Launch add() kernel on GPU with parameters (d_a, d_b, d_c)

add<<<1,1>>>(d_a, d_b, d_c);

...

}

To provide data parallelism, a multithreaded CUDA application is partitioned into blocks of threads that execute independently (and often concurrently) from each other. Each parallel invocation of add is referred to as a block. Each block have multiple threads. These block of threads can be scheduled on any of the available streaming multiprocessors (SM) within a GPU. In our simple example, since we just add one pair of numbers, we only need 1 block containing 1 thread (<<<1,1>>>).

In contrast to a regular C function call, a kernel can be executed N times in parallel by M CUDA threads (<<<N, M>>>). On current GPUs, a thread block may contain up to 1024 threads.

Copy kernel output to the host

Copy the addition result from the device to the host:

// Copy result back to the host

cudaMemcpy(&c, d_c, size, cudaMemcpyDeviceToHost);

Clean up

Clean up memory:

// Cleanup

cudaFree(d_a); cudaFree(d_b); cudaFree(d_c);

Putting together: Heterogeneous Computing

In CUDA, we define a single file to run both the host and the device code.

nvcc add.cu # Compile the source code

a.out # Run the code.

The following is the complete source code for our example.

// Kernel definition

// Run on GPU

__global__ void add(int *a, int *b, int *c) {

*c = *a + *b;

}

int main(void) {

// Allocate & initialize host data - run on the host

int a, b, c; // host copies of a, b, c

a = 2;

b = 7;

int *d_a, *d_b, *d_c; // device copies of a, b, c

// Allocate space for device copies of a, b, c

int size = sizeof(int);

cudaMalloc((void **)&d_a, size);

cudaMalloc((void **)&d_b, size);

cudaMalloc((void **)&d_c, size);

// Copy a & b from the host to the device

cudaMemcpy(d_a, &a, size, cudaMemcpyHostToDevice);

cudaMemcpy(d_b, &b, size, cudaMemcpyHostToDevice);

// Launch add() kernel on GPU

add<<<1,1>>>(d_a, d_b, d_c);

// Copy result back to the host

cudaMemcpy(&c, d_c, size, cudaMemcpyDeviceToHost);

// Cleanup

cudaFree(d_a); cudaFree(d_b); cudaFree(d_c);

return 0;

}

CUDA logical model

add<<<4,4>>>(d_a, d_b, d_c);

A CUDA applications composes of multiple blocks of threads (a grid) with each thread calls a kernel once.

In the second example, we have 6 blocks and 12 threads per block.

(source: Nvidia)

GPU physical model

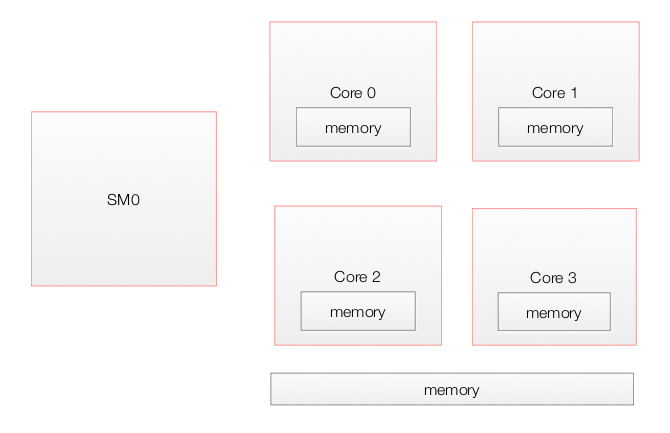

A GPU composes of many Streaming Multiprocessors (SMs) with a global memory accessible by all SMs and a local memory.

Each SM contains multiple cores which share a shared memory as well as one local to itself.

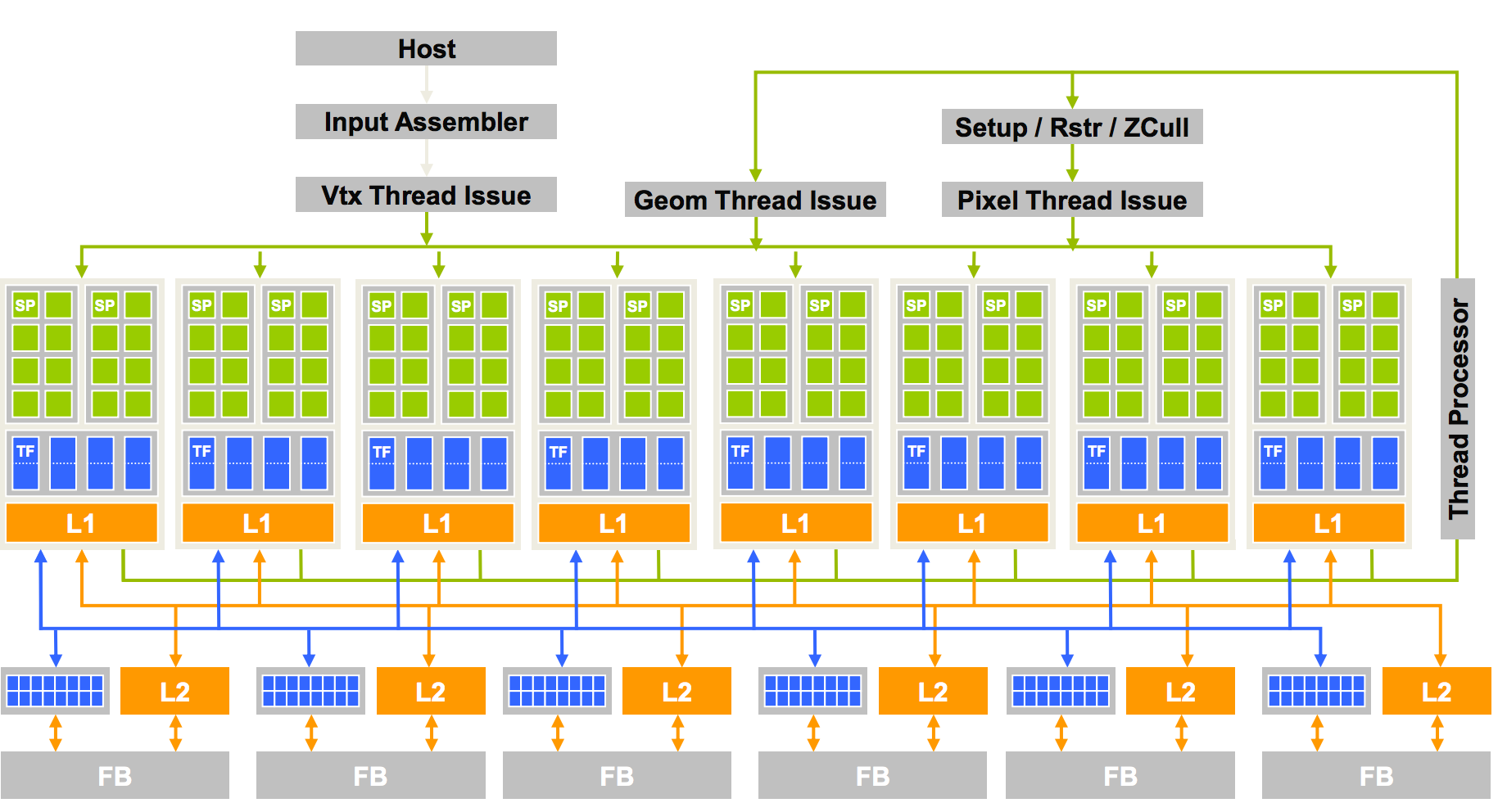

Here is the architect for GeoForce 8800 with 16 SMs each with 8 cores (Streaming processing SP).

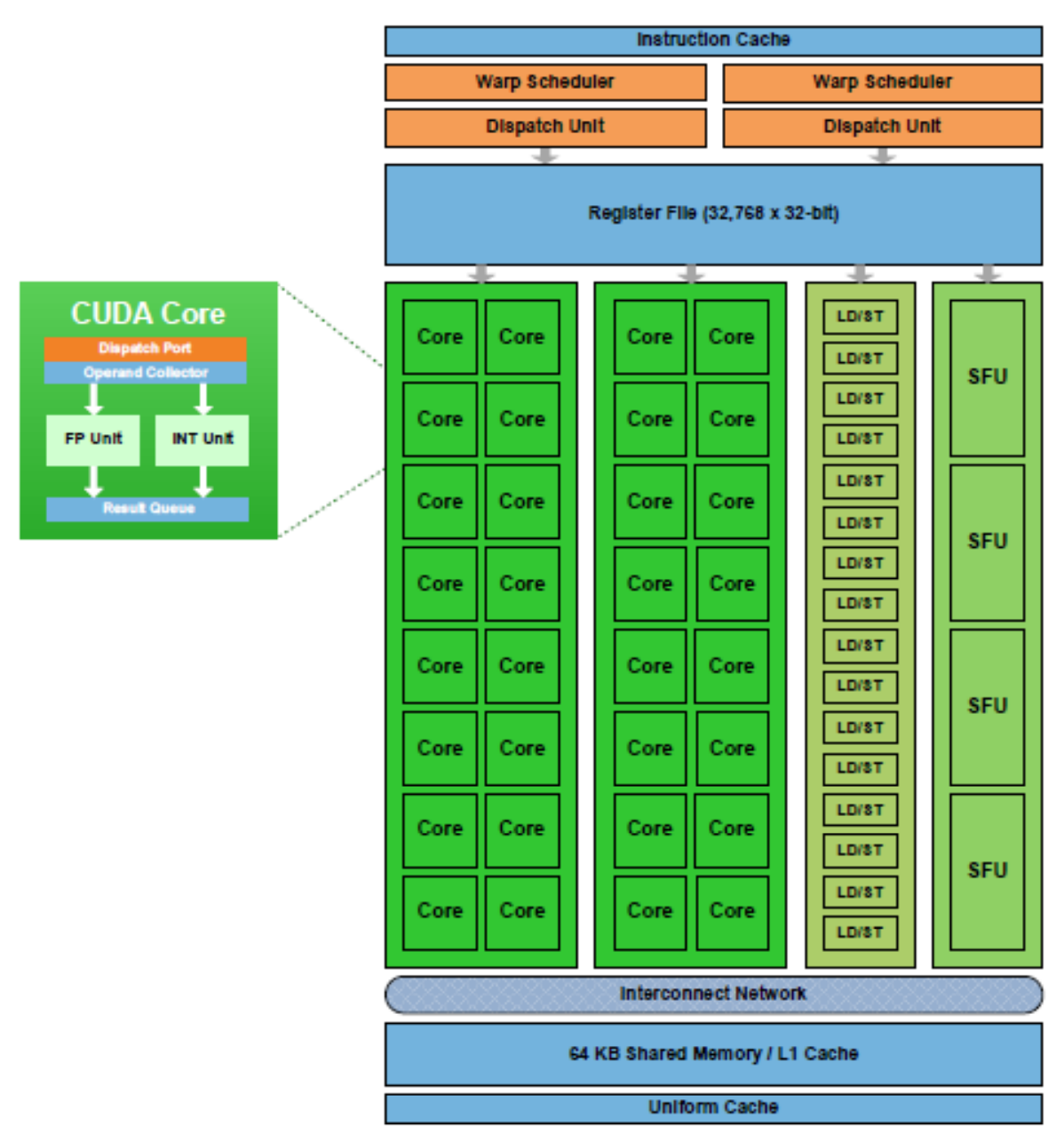

A SM in the Fermi architecture:

Execution model

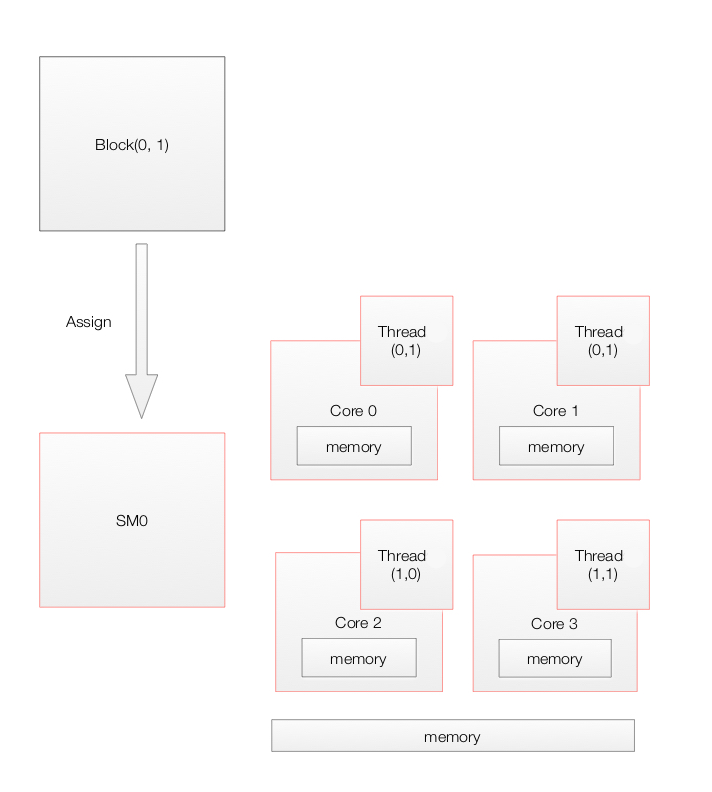

Device level

When a CUDA application on the host invokes a kernel grid, the blocks of the grid are enumerated and a global work distribution engine assign them to SM with available execution capacity. Threads of the same block always run on the same SM. Multiple thread blocks and multiple threads in a thread block can execute concurrently on one SM. As thread blocks terminate, new blocks are launched on the vacated multiprocessors.

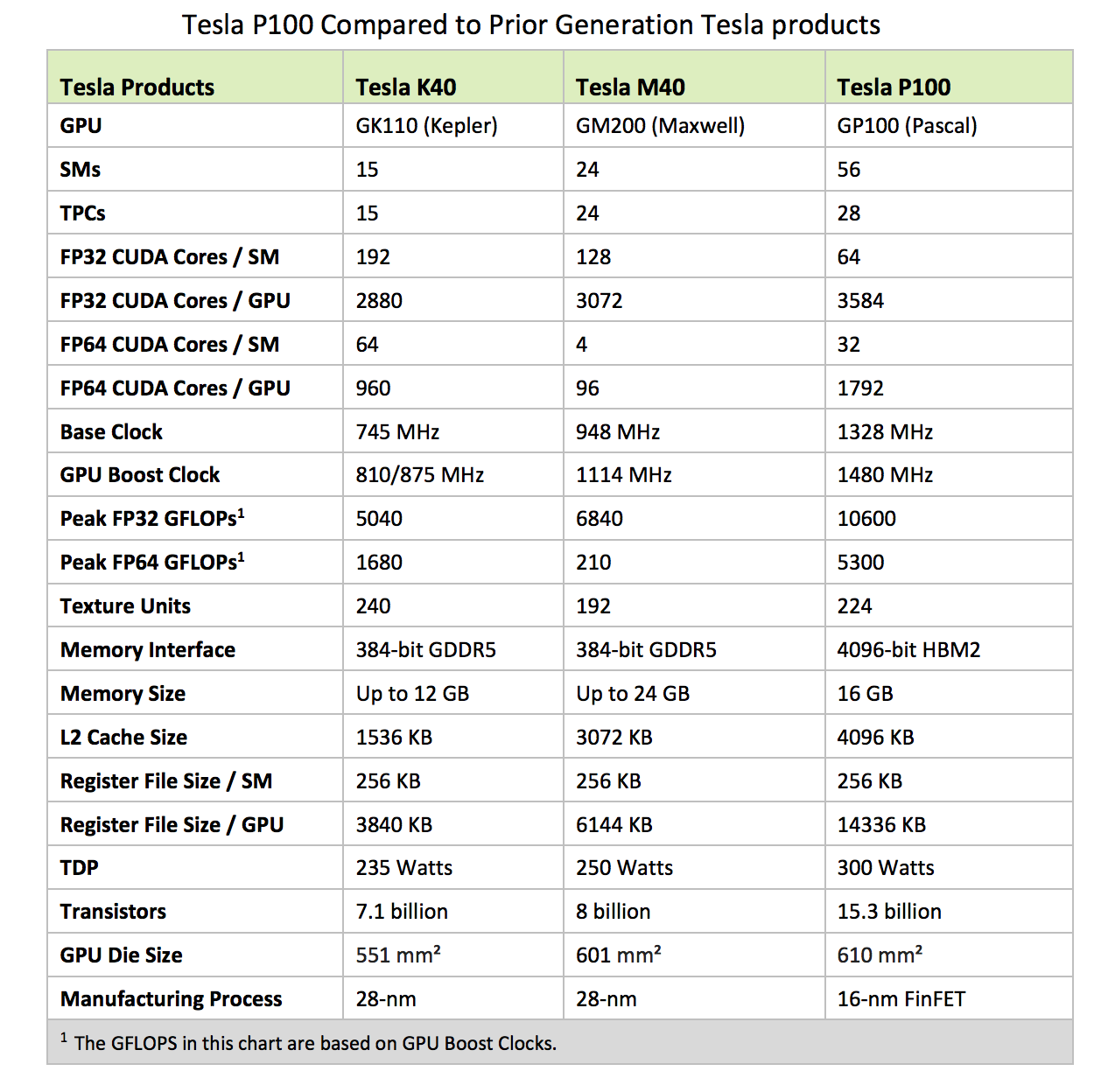

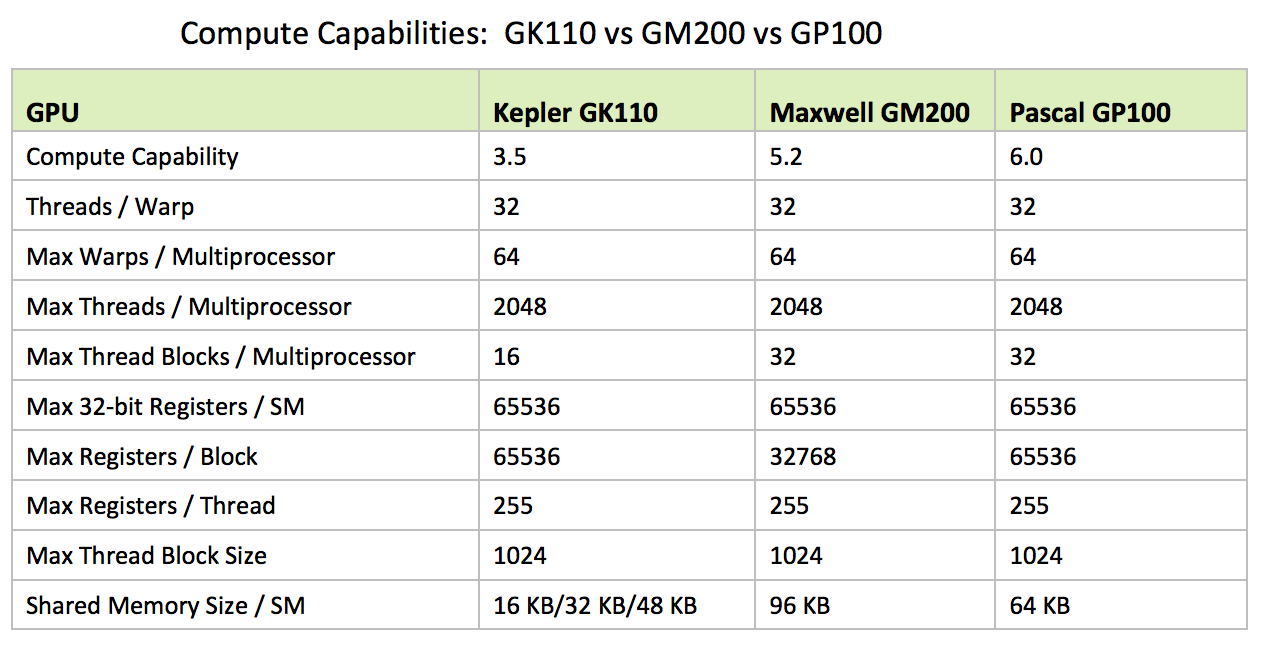

All threads in a grid execute the same kernel. GPU can handle multiple kernels from the same application simultaneously. Pascal GP100 can handle maximum of 32 thread blocks and 2048 threads per SM.

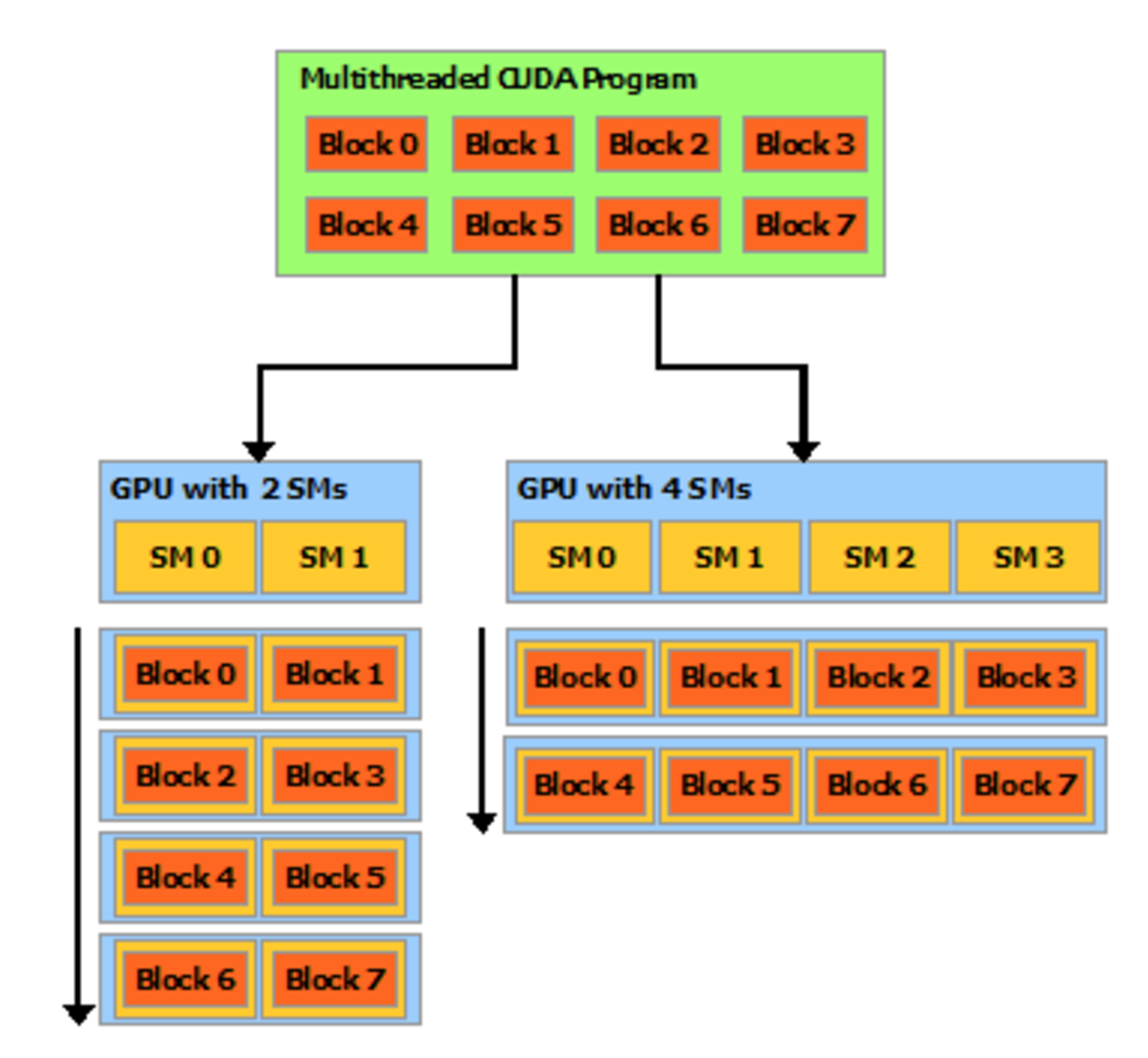

Here, we have a CUDA application composes of 8 blocks. It can be executed on a GPU with 2 SMs or 4SMs. With 4 SMs, block 0 & 4 is assigned to SM0, block 1, 5 to SM1, block 2, 6 to SM2 and block 3, 7 to SM3.

(source: Nvidia)

The entire device can only process one single application at a time and switch between applications is slow.

SM level

Once a block of threads is assigned to a SM, the threads are divided into units called warps. A block is partitioned into warps with each warp contains threads of consecutive, increasing thread IDs with the first warp containing thread 0.The size of a warp is determined by the hardware implementation. A warp scheduler selects a warp that is ready to execute its next instruction. In Fremi architect, the warp scheduler schedule a warp of 32 threads. Each warp of threads runs the same instruction. In the diagram below, we have 2 dispatch unit. Each one runs a different warp. In each warp, it runs the same instruction. When the threads in a warp is wait for the previous instruction to complete, the warp scheduler will select another warp to execute. Two warps from different blocks or different kernels can be executed concurrently.

Branch divergence

A warp executes one common instruction at a time. Each core (SP) run the same instruction for each threads in a warp. To execute a branch like:

if (a[index]==0)

a[index]++;

else

a[index]--;

SM skips execution of a core subjected to the branch conditions:

| c0 (a=3) | c1(a=3) | c2 (a=-3) | c3(a=7) | c4(a=2) | c5(a=6) | c6 (a=-2) | c7 (a=-1) | |

| if a[index]==0 | ↓ | ↓ | ↓ | ↓ | ↓ | ↓ | ↓ | ↓ |

| a[index]++ | ↓ | ↓ | ↓ | ↓ | ↓ | |||

| a[index]– | ↓ | ↓ | ↓ |

So full efficiency is realized when all 32 threads of a warp branch to the same execution path. If threads of a warp diverge via a data-dependent conditional branch, the warp serially executes each branch path taken, disabling threads that are not on that path, and when all paths complete, the threads converge back to the same execution path.

To maximize throughput, all threads in a warp should follow the same control-flow. Program can be rewritten such that threads within a warp branch to the same code:

if (a[index]<range)

... // More likely, threads with a warp will branch the same way.

else

...

is preferred over

if (a[index]%2==0)

...

else

...

For loop unrolling is another technique to avoid branching:

for (int i=0; i<4; i++)

c[i] += a[i];

c[0] = a[0] + a[1] + a[2] + a[3];

Memory model

Every SM has a shared memory accessible by all threads in the same block. Each thread has its own set of registers and local memory. All blocks can access a global memory, a constant memory(read only) and a texture memory. (read only memory for spatial data.)

Local, Global, Constant, and Texture memory all reside off chip. Local, Constant, and Texture are all cached. Each SM has a L1 cache for global memory references. All SMs share a second L2 cache. Access to the shared memory is in the TB/s. Global memory is an order of magnitude slower. Each GPS has a constant memory for read only with shorter latency and higher throughput. Texture memory is read only.

| Type | Read/write | Speed |

|---|---|---|

| Global memory | read and write | slow, but cached |

| Texture memory | read only | cache optimized for 2D/3D access pattern |

| Constant memory | read only | where constants and kernel arguments are stored |

| Shared memory | read/write | fast |

| Local memory | read/write | used when it does not fit in to registers part of global memory slow but cached |

| Registers | read/write | fast |

Local memory is just thread local global memory. It is much slower than either registers or shared memory.

Speed (Fast to slow):

- Register file

- Shared Memory

- Constant Memory

- Texture Memory

- (Tie) Local Memory and Global Memory

| Declaration | Memory | Scope | Lifetime |

| int v | register | thread | thread |

| int vArray[10] | local | thread | thread |

| __shared__ int sharedV | shared | block | block |

| __device__ int globalV | global | grid | application |

| __constant__ int constantV | constant | grid | application |

When threads in a warp load data from global memory, the system detects whether they are

consecutive. It combines consecutive accesses into one single access to DRAM.

Shared memory

Shared memory is on-chip and is much faster than local and global memory. Shared memory latency is roughly 100x lower than uncached global memory latency. Threads can access data in shared memory loaded from global memory by other threads within the same thread block. Memory access can be controlled by thread synchronization to avoid race condition (__syncthreads). Shared memory can be uses as user-managed data caches and high parallel data reductions.

Static shared memory:

#include

__global__ void staticReverse(int *d, int n)

{

__shared__ int s[64];

int t = threadIdx.x;

int tr = n-t-1;

s[t] = d[t];

// Will not conttinue until all threads completed.

__syncthreads();

d[t] = s[tr];

}

int main(void)

{

const int n = 64;

int a[n], r[n], d[n];

for (int i = 0; i < n; i++) {

a[i] = i;

r[i] = n-i-1;

d[i] = 0;

}

int *d_d;

cudaMalloc(&d_d, n * sizeof(int));

// run version with static shared memory

cudaMemcpy(d_d, a, n*sizeof(int), cudaMemcpyHostToDevice);

staticReverse<<<1,n>>>(d_d, n);

cudaMemcpy(d, d_d, n*sizeof(int), cudaMemcpyDeviceToHost);

for (int i = 0; i < n; i++)

if (d[i] != r[i]) printf("Error: d[%d]!=r[%d] (%d, %d)n", i, i, d[i], r[i]);

}

The __syncthreads() is light weighted and a block level synchronization barrier. __syncthreads() ensures all threads have completed before continue.

Dynamic Shared Memory:

#include

__global__ void dynamicReverse(int *d, int n)

{

// Dynamic shared memory

extern __shared__ int s[];

int t = threadIdx.x;

int tr = n-t-1;

s[t] = d[t];

__syncthreads();

d[t] = s[tr];

}

int main(void)

{

const int n = 64;

int a[n], r[n], d[n];

for (int i = 0; i < n; i++) {

a[i] = i;

r[i] = n-i-1;

d[i] = 0;

}

int *d_d;

cudaMalloc(&d_d, n * sizeof(int));

// run dynamic shared memory version

cudaMemcpy(d_d, a, n*sizeof(int), cudaMemcpyHostToDevice);

dynamicReverse<<<1,n,n*sizeof(int)>>>(d_d, n);

cudaMemcpy(d, d_d, n * sizeof(int), cudaMemcpyDeviceToHost);

for (int i = 0; i < n; i++)

if (d[i] != r[i]) printf("Error: d[%d]!=r[%d] (%d, %d)n", i, i, d[i], r[i]);

}

Shared memory are accessable by multiple threads. To reduce potential bottleneck, shared memory is divided into logical banks. Successive sections of memory are assigned to successive banks. Each bank services only one thread request at a time, multiple simultaneous accesses from different threads to the same bank result in a bank conflict (the accesses are serialized).

Shared memory banks are organized such that successive 32-bit words are assigned to successive banks and the bandwidth is 32 bits per bank per clock cycle. For devices of compute capability 1.x, the warp size is 32 threads and the number of banks is 16. There will be 2 requests for the warp: one for the first half and second for the second half. For devices of compute capability 2.0, the warp size is 32 threads and the number of banks is also 32. So for the best case, we need only 1 request.

For devices of compute capability 3.x, the bank size can be configured by cudaDeviceSetSharedMemConfig() to either four bytes (default) or eight bytes. 8-bytes avoids shared memory bank conflicts when accessing double precision data.

On devices of compute capability 2.x and 3.x, each multiprocessor has 64KB of on-chip memory that can be partitioned between L1 cache and shared memory.

For devices of compute capability 2.x, there are two settings:

- 48KB shared memory and 16KB L1 cache, (default)

- and 16KB shared memory and 48KB L1 cache.

This can be configured during runtime API from the host for all kernels using cudaDeviceSetCacheConfig() or on a per-kernel basis using cudaFuncSetCacheConfig().

Constant memory

SM aggressively cache constant memory which results in short latency.

__constant__ float M[10];

...

cudaMemcpyToSymbol(...);

Blocks and threads

CUDA uses blocks and threads to provide data parallelism. CUDA creates multiple blocks and each block has multiple threads. Each thread calls the same kernel to process a section of the data.

(source: Nvidia)

Here, we change our sample code to add 1024×1024 numbers together but the kernel remains almost the same by adding just one pair of number. To add all the numbers, we therefore create 4096 blocks with 256 threads per block.

[text{# of numbers} = (text{# of blocks}) times (text{# threads/block}) times (text{# of additions in the kernel})]

[1024 times 1024 = 4096 times 256 times 1]

Each thread that executes the kernel is given a unique Bock ID & thread ID that is accessible within the kernel through the built-in blockIdx.x and threadIdx,x variable. We use this index to locate which pairs of number we want to add in the kernel.

[index_{data} = index_{block} * text{ (# of threads per block)} + index_{thread}]

__global__ void add(int *a, int *b, int *c)

{

// blockIdx.x is the index of the block.

// Each block has blockDim.x threads.

// threadIdx.x is the index of the thead.

// Each thread can perform 1 addition.

// a[index] & b[index] are the 2 numbers to add in the current thread.

int index = blockIdx.x * blockDim.x + threadIdx.x;

c[index] = a[index] + b[index];

}

We change the program to add 1024×1024 numbers together with 256 threads per block:

#define N (1024*1024)

#define THREADS_PER_BLOCK 256

int main(void) {

int *a, *b, *c;

// Alloc space for host copies of a, b, c and setup input values

a = (int *)malloc(size); random_ints(a, N);

b = (int *)malloc(size); random_ints(b, N);

c = (int *)malloc(size);

int *d_a, *d_b, *d_c;

int size = N * sizeof(int);

// Alloc space for device copies of a, b, c

cudaMalloc((void **)&d_a, size);

cudaMalloc((void **)&d_b, size);

cudaMalloc((void **)&d_c, size);

// Copy inputs to device

cudaMemcpy(d_a, a, size, cudaMemcpyHostToDevice);

cudaMemcpy(d_b, b, size, cudaMemcpyHostToDevice);

// Launch add() kernel on GPU

add<<<N/THREADS_PER_BLOCK,THREADS_PER_BLOCK>>>(d_a, d_b, d_c);

// Copy result back to host

cudaMemcpy(c, d_c, size, cudaMemcpyDeviceToHost);

// Cleanup

free(a); free(b); free(c);

cudaFree(d_a); cudaFree(d_b); cudaFree(d_c);

return 0;

}

Here we invoke the kernel with multiple blocks and multiple threads per block.

// Launch add() kernel on GPU

// Use N/THREADS_PER_BLOCK blocks and THREADS_PER_BLOCK threads per block

add<<<N/THREADS_PER_BLOCK,THREADS_PER_BLOCK>>>(d_a, d_b, d_c);

Threads & shared memory

Why do we need threads when we have blocks? CUDA threads have access to multiple memory spaces with different performance. Each thread has its own local memory. Each thread block has shared memory visible to all threads of the block and with the same lifetime as the block. All threads have access to the same global memory. Data access for the shared memory is faster than the global memory. Data is copy from the host to the global memory in the GPU first. All threads in a block run on the same multiprocessor. Hence, to reduce memory latency, we can copy all the data needed for a block from the global memory to the shared memory.

Use __shared__ to declare a variable using the shared memory:

__global__ void add(int *a, int *b, int *c)

{

__shared__ int temp[1000];

}

Shared memory speeds up performance in particular when we need to access data frequently. Here, we create a new kernel stencil which add all its neighboring data within a radius.

[out = in_{k-radius} + in_{k-radius+1} + dots + in_{k} + in_{k+radius-1} + in_{k+radius}]

We read all data needed in a block to a shared memory. With a radius of 7 and a block with index from 512 to 1023, we need to read data from 505 to 1030.

#define RADIUS 7

#define BLOCK_SIZE 512

__global__ void stencil(int *in, int *out)

{

__shared__ int temp[BLOCK_SIZE + 2 * RADIUS];

int gindex = threadIdx.x + blockIdx.x * blockDim.x;

int lindex = threadIdx.x + RADIUS;

// Read input elements into shared memory

temp[lindex] = in[gindex];

// At both end of a block, the sliding window moves beyond the block boundary.

// E.g, for thread id = 512, we wiil read in[505] and in[1030] into temp.

if (threadIdx.x < RADIUS) {

temp[lindex - RADIUS] = in[gindex - RADIUS];

temp[lindex + BLOCK_SIZE] = in[gindex + BLOCK_SIZE];

}

// Apply the stencil

int result = 0;

for (int offset = -RADIUS ; offset <= RADIUS ; offset++)

result += temp[lindex + offset];

// Store the result

out[gindex] = result;

}

We must make sure the shared memory is smaller than the available physical shared memory.

Thread synchronization

The code in the last section has a fatal data racing problem. Data is not stored in the shared memory before accessing it. For example, to compute the result for say thread 20, we need to access (temp) corresponding to (in[13]) to (in[27]).

for (int offset = -RADIUS ; offset <= RADIUS ; offset++)

result += temp[lindex + offset]; // Data race problem here.

However, thread 27 is responsible for loading (temp) with (in[27]). Since threads are executed in parallel with no guarantee of order, we may compute the result for thread 20 before thread 27 stores (in[27]) into (temp).

if (threadIdx.x < RADIUS) {

temp[lindex - RADIUS] = in[gindex - RADIUS];

temp[lindex + BLOCK_SIZE] = in[gindex + BLOCK_SIZE];

}